
Monday, February 9th, 2009

Original Code:

    Public Function Process(ByVal headers As HeaderCollection, ByVal factory As HeadersFactory) As Boolean
        On Error GoTo ErrorHandler
        Dim i As Long
        Dim header As Header
        Dim receivedHeader As ReceivedHeader
        For i = 1 To headers.Count
            Set header = headers.Item(i)
            If (Trim(header.HeaderKey) = "Received") Then
                Set receivedHeader = factory.CreateReceivedHeader(header)
                If Not receivedHeader.IsIntranetServer Then
                    If receivedHeader.IsTrustedRecipient Then
                        headers.ourHeadersExistBefore (i)
                        Exit For
                    End If
                End If
            End If
        Next
        Exit Function
    ErrorHandler:
        Err.Raise Err.Number, Err.Source & vbCrLf & "HeadersProcessor.Process", Err.Description
    End Function

The nested if-else blocks bother me. Also, the code does not clearly communicate what is happening.

Refactored Code With Goto:

    Private receivedHeader As ReceivedHeader
    Private recievedHeaderIndex As Long

    Public Function Process(ByVal headers As HeaderCollection, ByVal factory As HeadersFactory) As Boolean
        On Error GoTo ErrorHandler
        Call ExtractFirstReceivedHeader(headers, factory)
        If receivedHeader Is Nothing Then Exit Function
        If receivedHeader.IsTrustedRecipient Then
            headers.ourHeadersExistBefore (recievedHeaderIndex)
        End If
        Exit Function
    ErrorHandler:
        Err.Raise Err.Number, Err.Source & vbCrLf & "HeadersProcessor.Process", Err.Description
    End Function

    Private Sub ExtractFirstReceivedHeader(ByVal headers As HeaderCollection, ByVal factory As HeadersFactory)
        On Error GoTo ErrorHandler
        Dim i As Long
        Dim header As Header
        For i = 1 To headers.Count
            Set header = headers.Item(i)
            If (Trim(header.HeaderKey) <> "Received") Then GoTo Continue
            If NotAnIntranetServer(header, i, factory) Then GoTo FinishedProcessing
    Continue:
        Next
    FinishedProcessing:
        Set header = Nothing
        Exit Sub
    ErrorHandler:
        Err.Raise Err.Number, Err.Source & vbCrLf & "HeadersProcessor.ExtractFirstReceivedHeader", Err.Description
    End Sub

    Private Function NotAnIntranetServer(ByVal header As Header, currentIndex As Long, ByVal factory As HeadersFactory) As Boolean
        On Error GoTo ErrorHandler
        Set receivedHeader = factory.CreateReceivedHeader(header)
        If receivedHeader.IsIntranetServer = False Then
            recievedHeaderIndex = currentIndex
            NotAnIntranetServer = True
        Else
            Set receivedHeader = Nothing
            NotAnIntranetServer = False
        End If
        Exit Function
    ErrorHandler:
        Err.Raise Err.Number, Err.Source & vbCrLf & "HeadersProcessor.NotAnIntranetServer", Err.Description
    End Function

    Private Sub Class_Terminate()
        Set receivedHeader = Nothing
    End Sub
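VB6 has no `Continue For` statement, which is why the refactored loop jumps to a `Continue` label. For comparison, here is a minimal Java sketch of the same control flow written with guard clauses and `continue`. The `Header` class is a hypothetical stand-in that folds the VB6 `Header` and `ReceivedHeader` into one object, and instead of calling `ourHeadersExistBefore` the sketch returns the index it would be called with (or -1). It follows the refactored version, which stops at the first non-intranet `Received` header and then checks trust; note that in the original nested version `Exit For` sits inside the trusted-recipient check, so that loop keeps scanning past an untrusted external header.

```java
import java.util.List;

// Hypothetical stand-in for the VB6 Header + ReceivedHeader pair (illustrative only).
final class Header {
    final String key;
    final boolean intranetServer;
    final boolean trustedRecipient;

    Header(String key, boolean intranetServer, boolean trustedRecipient) {
        this.key = key;
        this.intranetServer = intranetServer;
        this.trustedRecipient = trustedRecipient;
    }
}

final class HeadersProcessor {
    // Index of the first "Received" header that is not from an intranet server, or -1.
    static int firstExternalReceivedHeader(List<Header> headers) {
        for (int i = 0; i < headers.size(); i++) {
            Header header = headers.get(i);
            if (!header.key.trim().equals("Received")) continue; // plays the role of GoTo Continue
            if (header.intranetServer) continue;                 // skip intranet hops
            return i;                                            // plays the role of GoTo FinishedProcessing
        }
        return -1;
    }

    // Index that ourHeadersExistBefore would be called with, or -1 if it would not be called.
    static int process(List<Header> headers) {
        int index = firstExternalReceivedHeader(headers);
        if (index < 0) return -1; // no external Received header at all
        return headers.get(index).trustedRecipient ? index : -1;
    }
}
```

Each guard clause states one reason to skip a header, so the happy path reads top to bottom without nesting, and returning the index removes the need for the class-level `recievedHeaderIndex` field.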

Posted in Agile, Testing | 8 Comments »

Monday, February 9th, 2009

Old Test Code:

    [Test]
    public void ShouldCheckRecipientOfReceivedHeaderIsIntranetServer()
    {
        SetHeaderCollectionCount(2);
        Header header1 = CreateMockHeaderAndSetExpectation("Key");
        Header header2 = CreateMockHeaderAndSetExpectation("Received");

        collection.Stub(x => x.get_Item(1)).Return(header1);
        collection.Stub(x => x.get_Item(2)).Return(header2);

        factory.Expect(x => x.CreateReceivedHeader(header2)).Return(receivedHeader);
        receivedHeader.Expect(x => x.IsIntranetServer()).Return(true);

        processor.Process(collection, factory);
        receivedHeader.VerifyAllExpectations();
    }

    [Test]
    public void ShouldCheckIfRecipientOfReceivedHeaderCanBeTrusted()
    {
        SetHeaderCollectionCount(2);
        Header header1 = CreateMockHeaderAndSetExpectation("Key");
        Header header2 = CreateMockHeaderAndSetExpectation("Received");

        factory.Expect(x => x.CreateReceivedHeader(header2)).Return(receivedHeader);
        collection.Stub(x => x.get_Item(1)).Return(header1);
        collection.Stub(x => x.get_Item(2)).Return(header2);
        receivedHeader.Stub(x => x.IsIntranetServer()).Return(false);

        receivedHeader.Expect(x => x.IsTrustedRecipient()).Return(true);
        processor.Process(collection, factory);

        receivedHeader.VerifyAllExpectations();
    }

Can you understand what is happening here? It took me quite some time to understand it all. The first thing that caught my attention was the amount of duplication. All this duplication made it hard for me to see the real difference between the two tests.

I was also bothered that the tests mixed calls to methods at very different levels of abstraction. I really like creating a veneer of domain-specific language (method calls) that the rest of my code interacts with, i.e. methods whose calls are all at a similar level of abstraction.

Here is what I came up with to communicate the intent more clearly. I also tried to hide all the unnecessary stubbing logic; it is not worth having it stare me in the face.

Refactored Code:

    [Test]
    public void RecipientOfReceivedHeaderBelongingToIntranetServerIsIgnored()
    {
        AddHeader("MessageId");
        AddHeader("Received").FromIntranetServer();
        Process();
    }

    [Test]
    public void TrustedRecipientOfReceivedHeaderIsAccepted()
    {
        AddHeader("MessageId");
        AddHeader("Received").FromInternetServer().WhichIsTrusted();
        Process();
    }

    [TearDown]
    public void VerifyExpectations()
    {
        receivedHeader.VerifyAllExpectations();
    }

    private WhenHeadersAreProcessed FromInternetServer()
    {
        receivedHeader.Stub(x => x.IsIntranetServer()).Return(false);
        return this;
    }

    private WhenHeadersAreProcessed FromIntranetServer()
    {
        receivedHeader.Expect(x => x.IsIntranetServer()).Return(true);
        return this;
    }

    private void WhichIsTrusted()
    {
        receivedHeader.Expect(x => x.IsTrustedRecipient()).Return(true);
    }

    private void Process()
    {
        SetHeaderCollectionCount(headerCount);
        factory.Expect(x => x.CreateReceivedHeader(null)).IgnoreArguments().Return(receivedHeader);
        processor.Process(collection, factory);
    }

    private void SetHeaderCollectionCount(int count)
    {
        collection.Expect(x => x.Count).Return(count);
    }

    private WhenHeadersAreProcessed AddHeader(string key)
    {
        Header header = CreateMockHeaderAndSetExpectation(key);
        collection.Stub(x => x.get_Item(++this.headerCount)).Return(header);
        return this;
    }

Also see: Fluent Interfaces improve readability of my Tests
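What makes `AddHeader("Received").FromIntranetServer()` read like a sentence is that every builder method returns the fixture itself. Here is a minimal Java re-creation of that idea (not the author's C# fixture): a hand-rolled `FakeHeader` stands in for the Rhino Mocks stubs, the qualifier methods describe the most recently added header, and `process()` applies a simplified version of the header rule instead of calling the real processor. All names are illustrative.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical Java re-creation of the fluent fixture idea; FakeHeader replaces
// the mock stubs and process() applies a simplified header rule.
final class WhenHeadersAreProcessed {
    static final class FakeHeader {
        final String key;
        boolean intranetServer;
        boolean trustedRecipient;

        FakeHeader(String key) {
            this.key = key;
        }
    }

    private final List<FakeHeader> headers = new ArrayList<>();

    // Every builder method returns this, so calls chain like a sentence.
    WhenHeadersAreProcessed addHeader(String key) {
        headers.add(new FakeHeader(key));
        return this;
    }

    // The qualifiers below describe the most recently added header.
    WhenHeadersAreProcessed fromIntranetServer() {
        last().intranetServer = true;
        return this;
    }

    WhenHeadersAreProcessed fromInternetServer() {
        last().intranetServer = false;
        return this;
    }

    WhenHeadersAreProcessed whichIsTrusted() {
        last().trustedRecipient = true;
        return this;
    }

    // Index of the accepted Received header, or -1 if none is accepted.
    int process() {
        for (int i = 0; i < headers.size(); i++) {
            FakeHeader header = headers.get(i);
            if (!header.key.equals("Received") || header.intranetServer) {
                continue; // ignore non-Received headers and intranet hops
            }
            return header.trustedRecipient ? i : -1;
        }
        return -1;
    }

    private FakeHeader last() {
        return headers.get(headers.size() - 1);
    }
}
```

Because each step returns `this`, a test can chain the whole scenario into one expression, e.g. `new WhenHeadersAreProcessed().addHeader("MessageId").addHeader("Received").fromInternetServer().whichIsTrusted().process()`, which returns 1 here.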

Posted in Agile, Design, Testing | 2 Comments »

Monday, February 9th, 2009

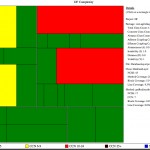

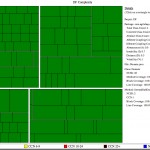

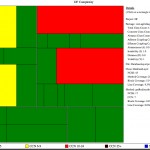

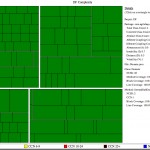

Some time back, I spent one week helping a project (a server written in Java) pay down its technical debt. The code base is tiny because it leans heavily on an existing server framework to do its job. This server handles extremely high volumes of data and requests and is a very important part of our server infrastructure. Here are some results:

| Topic | Before | After |
|---|---|---|
| Project size: production packages | 1 | 4 |
| Project size: production classes | 4 | 13 |
| Project size: production methods | 15 (average 3.75/class) | 68 (average 5.23/class) |
| Project size: production LOC | 172 (average 11.47/method and 43/class) | 394 (average 5.79/method and 30.31/class) |
| Project size: average cyclomatic complexity/method | 3.27 | 1.58 |
| Project size: test packages | 0 | 6 |
| Project size: test classes | 0 | 11 |
| Project size: test methods | 0 | 90 |
| Project size: test LOC | 0 | 458 |
| Code coverage: line | 0% | 96% |
| Code coverage: block | 0% | 97% |
| Cyclomatic complexity | | |
| Obvious dead code | Public method releasePool() in class DatabaseLayer (total: 1 method in 1 class) | Overloaded constructor in class DFService (total: 1 method in 1 class; this method is required by the tests) |
| Automation | | |
| Version control: average commits per day | 0 | 7 |
| Version control: average # of files changed per commit | 12 | 4 |
| Coding convention violations | 96 | 0 |

Another similar report.

Posted in Agile, Coaching, Design, Metrics, Planning, Programming, Testing, Tools | No Comments »

Sunday, February 1st, 2009

Programming is “the action or process of writing computer programs”.

By definition, programming encompasses analysis, design, coding, testing, debugging, profiling and a whole lot of other activities. Beware: coding is NOT programming. Depending on which school of thought you belong to, you will define the relationships and boundaries between these activities differently.

For example:

- In a waterfall world, each activity is a phase, and you want a clear sign-off between phases. These phases are also sequential by nature, with very limited or no feedback. Hence you are expected to have the full design in place before you can code. Otherwise, what would you code?

- In RUP (the so-called Iterative and Incremental model), even though it follows a spiral model with a feedback cycle every 3 months or so, one is expected to have the overall architecture of the project, and a documented design (in UML notation) of the subset of use cases planned for the current spiral, ready before the construction (coding) phase.

- In the unconventional model (where we don’t have process and tool servants, and team members can do what they think is most appropriate in the given context), we fail to understand these sequential, rigid processes. We have burnt our fingers way too many times trying to retrofit ourselves into these sequential, well-defined process boundaries guarded by the process police. So we have given up hope that we’ll ever be as smart as the rest of the “coding community” and have chosen a different route.

So how do we design systems then?

- Some of us start with a test (not all the tests, just one) to understand/clarify what we are trying to build.

- Others might write some prototype code (read: throwaway code) to understand what needs to be built.

- Some teams start by building a paper prototype (low-fidelity prototype) of what they plan to build, then jump straight to the keyboard to validate their thought process (at least once every few hours).

- Still others use plain old index cards to model the system and start writing a test to put their thoughts in an assertive medium.

This is just the tip of the iceberg. There are a million ways people program systems. We seem to use a lot of these practices in conjunction (because they are not mutually exclusive practices and can actually be done in parallel).

People who are successful in this model have recognized that they are dealing with a complex adaptive system (CAS), not a complicated system where you can define rigid boundaries and be successful. In a CAS, there are multiple ways to do something, and if someone claims that you always have to do X before Y, we can sense a desire to impose rigid constraints, which are fragile by nature. This is also why there is no such thing as Best Practices in our dictionary. Instead, we keep an eye on emerging patterns. If we want to see a particular pattern impact the system, we introduce attractors. If we don’t want a pattern to impact our system, we disrupt that pattern. (Borrowed from Dave Snowden, creator of the Cynefin model and a leading personality in the knowledge management community.)

The open source community in general is yet another classic example of the unconventional category. I’ve never been on an open source project where we had a design phase. People live and breathe evolutionary design. At best, you might have a simple wiki defining some guidelines.

Anyway, I’m not saying that upfront design is bad. All I’m saying is, don’t tell me that one always has to design first. In CAS, you tend to “Probe-Sense-Respond” and not “Analyze-Respond”. In software development “Action precedes Clarity”, almost always.

Posted in Agile, Design, Open Source, Programming, Testing | No Comments »

Wednesday, January 14th, 2009

Have you come across developers who think that having a separate Quality Assurance (QA) team, who could test (manually or auto-magically) their code/software at the end of an iteration/release, will really help them? Personally I think this style of software development is not just dangerous but also harmful to the developers’ growth.

Having a QA team that tests (inspects) the software after it’s built gives me the impression that you can slap inspection onto the end of any process and improve the quality of your product. Unfortunately, things don’t work this way. What you want to do is build quality into the process rather than inspecting (checking) at the end of it to assure quality.

Let me give you an example of what I mean by “building quality into the process”.

Back in the good old days, it was typical for a cloth manufacturer to have 10-15 power looms. They would set up these looms at the beginning of the day and let them run all day. At the end of the day, they would take all the cloth produced by the looms and hand it over to another team (a separate QA team) who would check each cloth for defects.

There were multiple sources of defects. At times one of the threads would break, creating a defect in the cloth. At times insects would sit on the thread and get woven into the cloth, also creating a defect. And so on. Checking the cloth at the end of the day was turning out to be very expensive for the cloth manufacturers. Basically, they were trying to create quality products by inspecting the cloth at the end of the process. This is similar to the QA process in a waterfall project.

Since this was not working out, they hired a lot of people to watch each loom. Best case, there would be one person per loom watching for defects. As soon as a thread would break, they would stop the loom, fix the thread and continue. This certainly helped to reduce the defects, but was not an optimal solution for several reasons:

- It was turning out to be quite expensive to have one person per loom

- People at the looms would take breaks during the day and they would either stop the loom during their break (production hit) or would take the risk of letting some defects slip.

- It became very dependent on how closely these folks watched the loom. In other words, the quality of the cloth depended heavily on the capability (good eyesight and keen attention) of the person inspecting the loom.

- and so on

As you can see, what we are trying to do here is move the quality assurance process upstream, trying to build quality into the manufacturing process. This is similar to the traditional Agile process where you have a couple of dedicated QAs on each team who check for defects during or at the end of the iteration.

The next step, which really helped fix this issue to a great extent, was a groundbreaking innovation by Toyoda Looms. As early as 1885, Sakichi Toyoda worked on improving looms.

One of his initial innovations was a small lever on each thread. As soon as the thread broke, the lever would jam the loom. They went on to introduce noteworthy inventions such as automatic thread replenishment without any drop in weaving speed, non-stop shuttle change motion, etc. Nowadays, you can find looms with sensors that detect insects or other dirt on the threads, and so on.

Basically, what happened in the loom industry is that they made various small mechanisms part of the loom to prevent defects from being introduced in the first place. In other words, as and when they found issues with the process, they mistake-proofed it by stopping the problem at its source. They built quality into the process by shifting their focus from Quality Assurance to Quality Control. This is what you see in some really good product companies that don’t have a separate QA team. They focus on how they can eliminate/reduce the chances of defects being introduced, rather than on how they can detect defects (which is wasteful).

Hence it’s important that we focus on Quality Control rather than Quality Assurance. The terms “quality assurance” and “quality control” are often used interchangeably to refer to ways of ensuring the quality of a service or product. The terms, however, have different meanings.

Assurance: The act of giving confidence, the state of being certain or the act of making certain.

Quality assurance: The planned and systematic activities implemented in a quality system so that quality requirements for a product or service will be fulfilled.

Control: An evaluation to indicate needed corrective responses; the act of guiding a process in which variability is attributable to a constant system of chance causes.

Quality control: The observation techniques and activities used to fulfill requirements for quality.

So think about it: do you really need a separate QA team? What are you doing on the Quality Control front?

IMHO, in the late ’90s eXtreme Programming really pushed the envelope on this front. With wonderful practices like Automated Acceptance Testing, Test Driven Development, Pair Programming and Continuous Integration, I think we are finally getting closer. Having continuous/frequent working sessions with your customers/users is another great way of building quality into the process.

Lean Startup practices like Continuous Deployment and A/B Testing take this one step further and are really effective in tightening the feedback cycle for measuring user behavior in real context.

As more and more companies embrace these methods, it’s becoming clear that we can do away with the concept of a separate QA team or an independent testing team.

Richard Sharpe conducted a great interview with Jean Tabaka and Bob Martin on the lean concept of “ceasing inspections”. In this 7-minute video, Jean and Bob support the idea of preventing defects upfront rather than catching them at the end.

Quality Assurance vs Quality Control

Posted in Agile, Continuous Deployment, Lean Startup, Testing | 31 Comments »

Saturday, December 27th, 2008

Recently I was working on a Domain Forwarding Server. For example, if you request http://google.com and Google wants to redirect you to http://google.in, this server handles the redirection for you. There are many permutations and combinations of how you might want to forward your domain to another domain.

On the first pass, I wrote a test:
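The core forwarding rule can be illustrated with a small sketch. The `ForwardingRule` class and its fields below are hypothetical names, not the server's real classes: it matches the requested host against the domain being forwarded and builds the Location value, appending the request path only when path forwarding is switched on (mirroring `forwardPath(true)` in the tests in this post).

```java
// Hypothetical sketch of the forwarding rule; ForwardingRule, its fields and
// methods are illustrative names, not the server's real classes.
final class ForwardingRule {
    private final String domain;       // domain being forwarded, e.g. "google.com"
    private final String destination;  // where requests should go, e.g. "google.in"
    private final boolean forwardPath; // whether to append the requested path

    ForwardingRule(String domain, String destination, boolean forwardPath) {
        this.domain = domain;
        this.destination = destination;
        this.forwardPath = forwardPath;
    }

    // Host names are case-insensitive, so compare them that way.
    boolean matches(String hostName) {
        return domain.equalsIgnoreCase(hostName);
    }

    // Value for the Location header of the redirect response.
    String location(String requestPath) {
        if (!forwardPath || requestPath == null) {
            return destination;
        }
        return destination + requestPath;
    }
}
```

With path forwarding on, a request for google.com with path /index.html yields the Location google.in/index.html; with it off, the visitor lands on google.in regardless of the path.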

    @Test
    public void permanentlyRedirectDomainsWithPath() {
        when(request.hostName()).thenReturn("google.com");
        when(request.protocol()).thenReturn("HTTP/1.1");
        when(request.path()).thenReturn("/index.html");
        domain.setDomain("google.com");
        domain.forwardPath(true);
        domain.setForward("google.in");

        response = service.processMessage(request);

        assertStatus(StatusCode.PermanentRedirect);
        assertLocation("google.in/index.html");
        assertStandardResponseHeader();
    }

Note that since the request is an expensive object to build, I’m stubbing it out. The domain, on the other hand, is more of a value object in this case, so it’s not stubbed or mocked.

This test has a lot of noise. All the stubbing logic made it very difficult to understand what was going on, so I extracted some methods to make the test more meaningful and to hide the stubbing logic.

    @Test
    public void permanentlyRedirectDomainsWithPath() {
        setDomainToBeRedirected("google.com");
        setDestinationDomain("google.in");
        shouldRedirectWithPath();
        setHostName("google.com");
        setPath("/index.html");

        response = service.processMessage(request);

        assertStatus(StatusCode.PermanentRedirect);
        assertLocation("google.in/index.html");
        assertStandardResponseHeader();
    }

Looking at this code, it occurred to me that I could use a fluent interface to make it read like natural language. So finally I got:

    @Test
    public void permanentlyRedirectDomainsWithPath() {
        request("google.com").withPath("/index.html");
        redirect("google.com").withPath().to("google.in");

        response = service.processMessage(request);

        assertStatus(StatusCode.PermanentRedirect);
        assertLocation("google.in/index.html");
        assertStandardResponseHeader();
    }

    private ThisTest request(String domainName) {
        when(request.hostName()).thenReturn(domainName);
        when(request.protocol()).thenReturn("HTTP/1.1");
        return this;
    }

    private void withPath(String path) {
        when(request.path()).thenReturn(path);
    }

    private ThisTest redirect(String domainName) {
        domain.setDomain(domainName);
        return this;
    }

    private ThisTest withPath() {
        domain.forwardPath(true);
        return this;
    }

    private void to(String domainName) {
        domain.setForward(domainName);
    }

Finally, after introducing Context Objects, I was able to make the code even easier to read and understand:

    @Test
    public void redirectSubDomainsPermanently() {
        lets.assume("google.com").getsRedirectedTo("google.in").withPath();

        response = domainForwardingServer.process(requestFor("google.com/index.html"));

        lets.assertThat(response).contains(StatusCode.PermanentRedirect)
            .location("google.in/index.html").protocol("HTTP/1.1")
            .connectionStatus("close").contentType("text/html")
            .serverName("Directi Server 2.0");
    }
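One way to build a context object like `lets` is to have it record the forwarding setup and hand out a separate assertion object whose methods each check one part of the response and return `this`. The sketch below is an assumption-laden illustration, not the post's actual classes: it uses a simple `Response` with an integer status and a header map, uses 301 (HTTP Moved Permanently) in place of `StatusCode.PermanentRedirect`, maps each check to an assumed header name, and leaves out `protocol()` for brevity.

```java
import java.util.Map;

// Hypothetical response shape: a status code plus response headers.
final class Response {
    final int status;
    final Map<String, String> headers;

    Response(int status, Map<String, String> headers) {
        this.status = status;
        this.headers = headers;
    }
}

// Context object: records the forwarding setup (package-private so a fake
// server or a test can read it) and hands out fluent assertions.
final class Lets {
    String domain;
    String destination;
    boolean forwardPath;

    Lets assume(String domain) {
        this.domain = domain;
        return this;
    }

    Lets getsRedirectedTo(String destination) {
        this.destination = destination;
        return this;
    }

    Lets withPath() {
        this.forwardPath = true;
        return this;
    }

    ResponseAssertion assertThat(Response response) {
        return new ResponseAssertion(response);
    }
}

// Each check throws AssertionError on mismatch and returns this so checks chain.
final class ResponseAssertion {
    private final Response response;

    ResponseAssertion(Response response) {
        this.response = response;
    }

    ResponseAssertion contains(int expectedStatus) {
        check(response.status == expectedStatus,
              "status: expected " + expectedStatus + " but was " + response.status);
        return this;
    }

    ResponseAssertion location(String expected) { return header("Location", expected); }
    ResponseAssertion connectionStatus(String expected) { return header("Connection", expected); }
    ResponseAssertion contentType(String expected) { return header("Content-Type", expected); }
    ResponseAssertion serverName(String expected) { return header("Server", expected); }

    private ResponseAssertion header(String name, String expected) {
        String actual = response.headers.get(name);
        check(expected.equals(actual), name + ": expected " + expected + " but was " + actual);
        return this;
    }

    private static void check(boolean ok, String message) {
        if (!ok) {
            throw new AssertionError(message);
        }
    }
}
```

Splitting setup (`Lets`) from verification (`ResponseAssertion`) keeps each chain small, and the first failing check reports exactly which part of the response was wrong.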

Posted in Agile, Testing | 2 Comments »

Monday, September 22nd, 2008

I’m about to make a rather controversial statement: “Developers who use a purely inside-out avatar of TDD, i.e. who use unit tests to drive their development, are really using tests to validate their design, not so much to drive it.”

The reason I think this way is that, in my experience, developers who drive development with unit tests already have a model of their design, whether mental, on a whiteboard, on CRC cards, etc. Unit tests really help them validate that design. (This is clearly not the same as BUFD, Big Upfront Design. In this context it’s micro-design: the design of a small feature, not the whole system.) Of course, there is some amount of driving the design as well. When developers find that the design they had in mind does not fit well, they evolve it and use unit tests to get feedback. But as you can see, the intent is not really to discover a new design by writing the test first.

Is this wrong? Is this a sign that you are a poor developer? Absolutely not. It’s perfectly fine to do so, and it works really well in some cases for some people. But I want to highlight that there is another approach to influencing design as well.

In my experience, acceptance tests are better at driving/discovering micro-design. When your test is not in implementation-land but in defining-the-problem-land, there is a better chance of the test helping you discover the design.

While TDD is great at driving/discovering micro-design, prototyping is great at driving/discovering macro-design.

Posted in Agile, Design, Testing | No Comments »

Monday, September 22nd, 2008

At the Agile 2008 conference in Toronto, Bill Wake and I facilitated a workshop on Styles of TDD (we had to change the name from “Avatars of TDD” based on feedback that most people don’t know the word “avatar”). You can find a quick summary of the workshop here: http://agile2008toronto.pbwiki.com/Styles-of-TDD

Posted in Agile, Conference, Design, Testing | No Comments »

Saturday, August 9th, 2008

Recently I came across a very successful company that builds infrastructure-related servers. The company has 13 people. I spent some time watching how it works. What really caught my attention was that they were not using TDD. Forget TDD, they did not even have any concept of tests or testers in the company. On average, they had a release cycle of 3 weeks, and they would throw away their products every 3 months. Zero time was spent on refactoring and maintaining existing products.

Their market is one where new technologies and new ways of doing things keep coming up every 2-3 months. So they can either take their existing products and enhance them with the new features, or take the latest product on the market and tailor it to their needs. A lot of their products are built on open source products. In some cases they simply hire the open source author and ask him/her to customize the open source product so that the company can adapt it to its needs.

They don’t always find a suitable open source product. In those cases, the company would form 3 teams of 3 developers each and ask each team to build the same product with whatever technology it chose. Whichever team finished first would release its product. When the second team was done, they would compare the two. If the second one was better on the parameters that mattered to the company, they would throw away the first one and release the second one.

According to the company’s founder, throwing things away was cheaper than enhancing existing products.

In general, TDD and refactoring are great practices to have on a team, but don’t be dogmatic about them.

Posted in Agile | 3 Comments »
