Archive for the ‘Planning’ Category

Saturday, August 1st, 2015

In the software world, we call this speculative generality, YAGNI, or over-engineering.

IMHO we should not be afraid to throw parts of our system out every 6-8 months and rebuild them. Speed and simplicity trump everything! Also, in 6-8 months, new technology, your improved experience, better clarity, etc. will help you design a better solution than what you can design today.

Just to be clear, I’m not suggesting that we do a sloppy job and then rewrite the system because of that sloppiness. I’m recommending that we solve today’s problem in the simplest, cleanest and most effective/efficient way. Let’s not pretend to know the future and build things based on that, because none of us knows what the future looks like. The best we can do is guess at the future, and we humans are not very good at it.

Posted in Agile, Design, Planning, Product Development, Programming | No Comments »

Saturday, May 26th, 2012

Good Product Owners are:

- Visionary

- Can come up with a product vision which motivates, inspires and drives the team

- Aligns the product vision with company’s vision or mission

- Passionate Problem Solver

- Has a knack for identifying real problems and the ability to visualize a simple solution to those problems

- Has good analytical & problem solving skills

- Subject Matter Expert

- Understands the domain well enough to envision a product that solves the crux of the problem

- Able to answer questions regarding the domain for those creating the product

- End User Advocate

- Empathetic to end users’ problems and needs

- Able to describe the product from an end-user’s perspective. Requires a deep understanding of users and use

- Is passionate about great user experience

- Customer Advocate

- Understands the needs of the business buying the product

- Able to select a mix of features valuable to different customers

- Business Advocate

- Can identify the business value and synthesize the business strategy as measurable product goals

- Has a good grasp of various business/revenue models and pricing strategies

- Capable of segmenting the market, sizing it and positioning a product (articulate the Unique Selling Proposition)

- Is good at competitive analysis and competitor profiling

- Able to create a product launch strategy

- Communicator

- Capable of communicating vision and intent – deferring detailed feature and design decisions to be made just in time

- Decision Maker

- Given a variety of conflicting goals and opinions, be the final decision maker for hard product decisions

- Designer

- Possess a deep understanding of (product) design thinking

- Able to work effectively with an evolving product design

- Planner

- Given the vision, should be able to work with the team to break it down into an iterative and incremental product plan

- Capable of creating a release roadmap with meaningful release goals

- Is feedback-driven, i.e. very keen to inspect and adapt based on feedback

- Collaborator

- Able to work collaboratively with different roles to fulfill the product vision. Be inclusive and empathetic to the difficulties faced by the members of the cross-functional team

- Given all the different stakeholders, should be able to balance their needs and priorities

- Empowers the team and encourages everyone to try new ideas and innovate

Disclaimer: This list is based on my personal experience but originally inspired by discussions with Jeff Patton.

In my experience it’s hard (not impossible) to find someone who possesses all these skills. It requires years of hands-on experience.

Some companies form a Product Ownership team, comprising different people, who can collectively bring these skills to the table. Personally I prefer supporting one person to gradually build these skills.

I’m amazed at how easily companies get convinced that they can send their employees to a 2-day class on Product Ownership and have them acquire all these skills to become certified Product Owners.

Posted in Agile, Lean Startup, Organizational, Planning, Product Development | 2 Comments »

Tuesday, March 27th, 2012

Many friends responded to my previous post on How Much Should You Think about the Long-Term? saying:

Even if the future is uncertain and we know it will change, we should always plan for the long-term. Without a plan, we cease to move forward.

I’m not necessarily in favor of or against this philosophy. However, I’m concerned about the following points:

- Effort: The effort required to put together an initial direction is very different from the effort required to put a (proper) plan together. Is that extra effort really worth it, especially when we know things will change?

- Attachment: Sometimes we get attached to our plans. Even when we see that things are not quite in line with our plan, we think it’s because we’ve not given it enough time or we’ve not done justice to it.

- Conditioned: Sometimes I notice that when we have a plan in place, knowingly or unknowingly we stop watching out for certain things. Mentally we are at ease and we build a shield around us. We get in the groove of our plan and miss some wonderful opportunities along the way.

The amount of planning required seems to be directly proportional to the size of your team.

If your team consists of a couple of people, you can go fairly lightweight. And that’s my long-term plan to deal with uncertainty.

Posted in Agile, Analysis, Cognitive Science, Learning, Organizational, Planning | 2 Comments »

Tuesday, March 27th, 2012

Often people tell you that “You should think about the long-term.”

Sometimes people tell you, “Forget long-term, it’s too vague, but you should at least think beyond the near-term.”

Really?

Unfortunately, the part of my brain (the prefrontal cortex) that can see and analyze the future has failed to develop as it has in other smart beings.

At times, I try to fool myself saying I can anticipate the future, but usually when I get there (the future) it’s quite different. I realize that the way I think about the future is fundamentally flawed. I take the present and fill it with random guesses about something that might happen. But I always miss things that I’m not aware of or not exposed to.

In today’s world, when there are a lot of new ideas/stuff going around us, I’m amazed at how others can project themselves into the future and plan for the long term.

Imagine a tech company making its long-term plan 5 years ago, when there were no iPads/tablets. They must all have guessed a tablet revolution and accounted for that in their long-term plans. Or even if they did not, it would have been easy for them to embrace it, right?

You could argue that the tablet revolution is a one-off phenomenon or an outlier. Generally things around here are very predictable and we can plan our long-term without an issue. Global economics, stability of government, rules and regulations, emergence of new technologies, new markets, movement of people, changes in their aspirations, environmental issues, none of these impact us in any way.

Good for you! Unfortunately I don’t live in a world like that (or at least don’t fool myself thinking about the world that way.)

By now, you must be aware that we live in a complex adaptive world and that we humans ourselves are complex adaptive systems. In a complex adaptive system, causality is retrospectively coherent, i.e. hindsight does not lead to foresight.

10 years ago, when I first read about YAGNI and DTSTTCPW, I thought that was profound. It was against the common wisdom of how people designed software. Software development has come a long way since then. But is XP the best way to build software? Don’t know. The question is: did the people who used these principles build great systems? The answer is: yes, quite a few of them.

In essence, I think one should certainly think about the future, make reasonable (quick) guesses and move on. Key is to always keep an open mind and listen to subtle changes around us.

One cannot rely solely on “intuition” about the long term. Arguing about things you’ve all only guessed seems like a huge waste of time and effort. Remember, there is a point of diminishing returns: the more time you spend thinking long-term, the lower your chances of getting it right.

I’m a strong believer in “Action Precedes Clarity!”

Update: In response to many comments: When the Future is Uncertain, How Important is A Long-Term Plan?

Posted in Agile, Analysis, Cognitive Science, Learning, Organizational, Planning | 1 Comment »

Tuesday, November 1st, 2011

Many product companies struggle with a big challenge: how to identify a Minimum Viable Product that will let them quickly validate their product hypothesis?

Teams that share the product vision and agree on priorities for features are able to move faster and more effectively.

During this workshop, we’ll take a hypothetical product and coach you on how to effectively come up with an evolutionary roadmap for your product.

This day-long workshop teaches you how to collaborate on the vision of the product and create a Product Backlog, a User Story map and a pragmatic Release Plan.

Detailed Activity Breakup

- PART 1: UNDERSTAND PRODUCT CONTEXT

- Introduction

- Define Product Vision

- Identify Users That Matter

- Create User Personas

- Define User Goals

- A Day-In-Life Of Each Persona

- PART 2: BUILD INITIAL STORY MAP FROM ACTIVITY MODEL

- Prioritize Personas

- Break Down Activities And Tasks From User Goals

- Lay Out Goals Activities And Tasks

- Walk Through And Refine Activity Model

- PART 3: CREATE FIRST-CUT PRODUCT ROAD MAP

- Prioritize High Level Tasks

- Define Themes

- Refine Tasks

- Define Minimum Viable Product

- Identify Internal And External Release Milestones

- PART 4: WRITE USER STORIES FOR THE FIRST RELEASE

- Define User Task Level Acceptance Criteria

- Break Down User Tasks To User Stories Based On Acceptance Criteria

- Refine Acceptance Criteria For Each Story

- Find Ways To Further Thin-Slice User Stories

- Capture Assumptions And Non-Functional Requirements

- PART 5: REFINE FIRST INTERNAL RELEASE BASED ON ESTIMATES

- Define Relative Size Of User Stories

- Refine Internal Release Milestones For First-Release Based On Estimates

- Define Goals For Each Release

- Refine Product And Project Risks

- Present And Commit To The Plan

- PART 6: RETROSPECTIVE

- Each part will take roughly 30 mins.

I’ve facilitated this workshop for many organizations (small-startups to large enterprises.)

More details: Product Discovery Workshop from Industrial Logic

Techniques

Focused Break-Out Sessions, Group Activities, Interactive Dialogues, Presentations, Heated Debates/Discussions and Some Fun Games

Target Audience

- Product Owner

- Release/Project Manager

- Subject Matter Expert, Domain Expert, or Business Analyst

- User Experience team

- Architect/Tech Lead

- Core Development Team (including developers, testers, DBAs, etc.)

This tutorial can take max 30 people. (3 teams of 10 people each.)

Workshop Prerequisites

- Required: working knowledge of Agile (iterative and incremental software delivery models)

- Required: working knowledge of personas, user stories, backlogs, acceptance criteria, etc.

Testimonials

“I come away from this workshop having learned a great deal about the process and equally about many strategies and nuances of facilitating it. Invaluable!

Naresh Jain clearly has extensive experience with the Product Discovery Workshop. He conveyed the principles and practices underlying the process very well, with examples from past experience and application to the actual project addressed in the workshop. His ability to quickly relate to the project and team members, and to focus on the specific details for the decomposition of this project at the various levels (goals/roles, activities, tasks), is remarkable and a good example for those learning to facilitate the workshop.

Key take-aways for me include the technique of acceptance criteria driven decomposition, and the point that it is useful to map existing software to provide a baseline framework for future additions.”

Doug Brophy, Agile Expert, GE Energy

Learning outcomes

- Understand the thought process and steps involved during a typical product discovery and release planning session

- Using various User-Centered Design techniques, learn how to create a User Story Map to help you visualize your product

- Understand various prioritization techniques that work at the Business-Goal and User-Persona Level

- Learn how to decompose User Activities into User Tasks and then into User Stories

- Apply an Acceptance Criteria-Driven Discovery approach to flesh out thin slices of functionality that cut across the system

- Identify various techniques to narrow the scope of your releases, without reducing the value delivered to the users

- Improve confidence and collaboration between the business and engineering teams

- Practice key techniques to work in short cycles to get rapid feedback and reduce risk

Posted in Agile, agile india, Analysis, Coaching, Conference, Design, Lean Startup, Planning, Product Development, Training | No Comments »

Monday, April 18th, 2011

Building on top of my previous blog entry: Version Control Branching (extensively) Considered Harmful

I always discourage teams from preemptively branching a release candidate and then splitting their team to harden the release while the rest of the team continues working on next release features.

My reasoning:

- Increases the work-in-progress and creates a lot of planning, management, version-control, testing, etc. overheads.

- In the grand scheme of things, we are focusing on resource utilization, but the throughput of the overall system is actually reducing.

- During development, teams get very focused on churning out features. Subconsciously they know there will be a hardening/optimization phase at the end, so they tend to cut corners for short-term speed gains. This attitude has a snowball effect. Overall, it encourages a “not-my-problem” attitude towards quality, performance and overall usability.

- The team (developers, testers and managers) responsible for hardening the release has to work extremely hard under high pressure, causing them to burn out (and possibly introduce more problems into the system.) They have to suffer for the mistakes others have made. Does not seem like a fair system.

- Because the team is under high pressure to deliver the release, even though they know something really needs to be redesigned/refactored, they just patch it up. Constantly doing this creates a big ball of complex mud that only a few people understand.

- Creates a “Knowledge/Skill divide” between the developers and testers of the team. Generally the best (most trusted and knowledgeable) members are picked to work on the release hardening and performance optimization. They learn many interesting things while doing this. This newly acquired knowledge does not effectively get communicated back to other team members (mostly developers). Others continue doing what they used to do (potentially wrong things which the hardening team has to fix later.)

- As releases pass by, there are fewer and fewer people who understand the overall system and only they are able to effectively harden the project. This is a huge project risk.

- Over a period of time, every new release needs more hardening time due to the points highlighted above. This approach does not seem like a good strategy of getting out of the problem.

If something hurts, do it all the time to reduce the pain and get better at it.

Hence we should build release hardening as much as possible into the routine everyday work. If you still need hardening at the end, then instead of splitting the teams, we should let the whole team swarm on making the release.

Also, I usually notice that if only a subset of the team can effectively do the hardening, then it’s a good indication that the team is over-staffed, and that might be one of the reasons for many problems in the first place. It might be worth considering down-sizing your team to see if some of those problems can be addressed.

Posted in Agile, Continuous Deployment, Learning, Organizational, Planning, Programming, Testing | No Comments »

Sunday, March 6th, 2011

“I’m going shopping, can you please give me the details of everything you’ll need for the next year?”

What if I asked you this question?

Don’t just throw the mouse at me yet. You look extremely annoyed, but indulge me for a minute. Do you have any idea how much more you might spend because of your lack of planning? I’m sure when you run out of things, you wish you had planned better. After all, good upfront planning is always helpful. It’s Industry BEST PRACTICE.

Let’s assume you are convinced by my logical reasoning and well-polished methodological approach to planning. You start creating a backlog of items you’ll need over the next year. And you start filling out the details for each item in a nifty little template I’ve given you. Of course it’s taking a lot longer than you imagined, but you are discovering (or at least being forced to think about) many things you had never thought about.

By the way, at the end of this exercise you’ll need to hand over a signed list to me, and you can’t change your mind later. We don’t entertain change requests later, as they’re more expensive. After all, we need to put some constraints in place to make our planning effective.

What, when, how, how much, etc.: all kinds of interesting questions plague your mind, making you realize how unplanned and clueless you were.

Perseverance always wins, in the end. Finally you have a backlog of items you’ll need for the year.

OK. Cut! Lights!

I bet one of two things is true about the list of items:

- The list was very ambitious (massively grandiose.) You fantasized about every possible thing you might ever need, just in case. (After all, what is the guarantee you’ll get everything you asked for?)

- You came up with a very humble list and, since you won’t be able to change it cheaply, you now regret indulging me.

Either way, it’s bad news for you.

This is exactly what happens on many software projects. Right at the beginning of the project, the people who need the software (users or product management) are forced to come up with a detailed spec of everything they need from the software, with a higher price tag for late changes. This forces them to fantasize about everything they might remotely need. After all, they are not sure what will really be required once they have the software a year or two later.

The development team gets a pile of stuff with different priorities and importance, but all mixed up.

The team tries to come up with a grandiose vision and architecture for the project in the name of extensibility.

Eventually, a couple of years from now, if the team somehow manages to deliver the product:

- It’s bound to be off target.

- Users will force the team to add new, unplanned features which are very critical for the usability of the product.

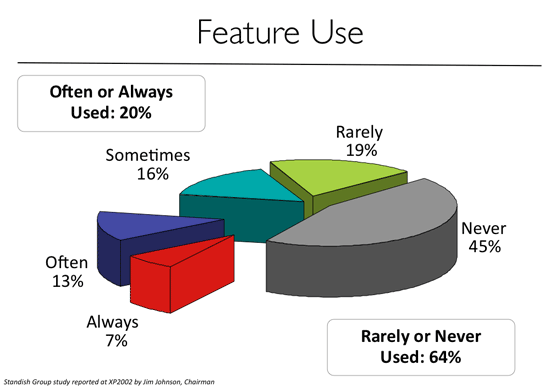

- A good 80% of the features are rarely used or never used.

- Those 80% of the features contribute to the majority of the bugs and complexity in the software.

- The overall product feels like a hotchpotch of “stuff”. It lacks symmetry and conceptual integrity.

What you really have is a prototype that the team is ready to discard and start over again.

I call this phenomenon The Window of Opportunity. One opportunity, at the beginning, to express what you want. Take your best guess.

We can do much better than this. I would prefer to start really small, very focused. Use Agile methods to build the product collaboratively using an iterative AND incremental model. Embrace evolutionary design and architecture.

The Window of Opportunity *might* sound good in theory, but it’s too risky.

Posted in Agile, Planning | 1 Comment »

Sunday, March 6th, 2011

Recently TV tweeted saying:

Is “measure twice, cut once” an #agile value? Why shouldn’t it be – it is more fundamental than agile.

To which I responded saying:

“measure twice, cut once” makes sense when cost of a mistake & rework is huge. In software that’s not the case if done in small, safe steps. A feedback centric method like #agile can help reduce the cost of rework. Helping you #FailFast and create opportunities for #SafeFailExperiements. (Extremely important for innovation.)

To step back a little, the proverb “measure twice and cut once” in carpentry literally means:

“One should double-check one’s measurements for accuracy before cutting a piece of wood; otherwise it may be necessary to cut again, wasting time and material.”

Speaking more figuratively it means “Plan and prepare in a careful, thorough manner before taking action.”

Unfortunately many software teams literally take this advice as

“Let’s spend a few solid months carefully planning, estimating and designing software upfront, so we can avoid rework and last minute surprise.”

However, after doing all that, they realize it was not worth it. Best case, they delivered something useful to end users with about 40% rework. Worst case, they never delivered, or delivered something buggy that does not meet users’ needs. But what about the opportunity cost?

Why does this happen?

Humphrey’s law says: “Users will not know exactly what they want until they see it (may be not even then).”

So how can we plan (measure twice) when it’s not clear what exactly our users want (even if we can pretend that we understand our users’ needs)?

How can we plan for uncertainty?

IMHO you can’t plan for uncertainty. You respond to uncertainty by inspecting and adapting. You learn by deliberately conducting many safe-fail experiments.

What is Safe-Fail Experimentation?

Safe-fail experimentation is a learning and problem-solving technique which emphasizes conducting many simultaneous, small, controlled experiments with small variations. Since these are small controlled experiments, failure is an expected & acceptable outcome.

In the software world, spiking, low-fi-prototypes, set-based design, continuous deployment, A/B Testing, etc. are all forms of safe-fail experiments.

Generally we like to start with something really small (but end-to-end) and rapidly build on it using user feedback and personal experience. Embracing Simplicity (“maximizing the amount of work not done”) is critical as well. You frequently cut small pieces, integrate the whole and see if it’s aligned with users’ needs. If not, the cost of rework is very small. Embrace small #SafeFail experiments to really innovate.

Or as Kerry says:

“Perhaps the fundamental point is that in software development the best way of measuring is to cut.”

I also strongly recommend you read the Basic principles of safe-fail experimentation.

Posted in Agile, Continuous Deployment, Planning | No Comments »

Saturday, December 4th, 2010

Every day I hear horror stories of how developers are harassed by managers and customers for not having predictable/stable velocity. Developers are penalized when their estimates don’t match their actuals.

If I understand correctly, the reason we moved to story points was to avoid this public humiliation of developers by their managers and customers.

It has probably helped some teams, but the vast majority of teams today are no better off than before, except that now they have this one extra level of indirection because of story points and then velocity.

We can certainly blame the developers and managers for not understanding story points in the first place. But will that really solve the problem teams are faced with today?

Please consider reading my blog on Story Points are Relative Complexity Estimation techniques. It will help you understand what story points are.

Assuming you know what story point estimates are, let’s consider that we have some user stories with different story points, which help us understand their relative complexity.

Then we pick up the most important stories (with different relative complexities) and try to do those stories in our next iteration/sprint.

Let’s say we end up finishing 6 user stories at the end of this iteration/sprint. We add up all the story points for each user story which was completed and we say that’s our velocity.

Next iteration/sprint, we say we can roughly pick up the same amount of total story points based on our velocity. And we plan our iterations/sprints this way. We find an oscillating velocity each iteration/sprint, which in theory should normalize over a period of time.

But do you see a fundamental error in this approach?

First we said that a 2-point story is not 2 times bigger than a 1-point story. Let’s say implementing a 1-point story might take 6 hrs, while implementing a 2-point story takes 9 hrs. Hence we assigned arbitrary numbers (from the Fibonacci series) to story points in the first place. But then we go and add them all up.

If you still don’t get it, let me explain with an example.

In the nth iteration/sprint, we implemented 6 stories:

- Two 1-point stories

- Two 3-point stories

- One 5-point story

- One 8-point story

So our total velocity is ( 2*1 + 2*3 + 5 + 8 ) = 21 points. In 2 weeks we got 21 points done, hence our velocity is 21.

Next iteration/sprint, we’ll take:

- Twenty-one 1-point stories

Take a wild guess what would happen?
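To make that guess concrete, here is a rough sketch in Python. The hours for 1-point and 2-point stories come from the example above; the hours for 3-, 5- and 8-point stories are made-up assumptions purely for illustration, as are the names `HOURS_PER_STORY`, `velocity` and `sprint_hours`.

```python
# Hypothetical effort per story, in hours, keyed by story points.
# 1 -> 6 and 2 -> 9 come from the example above; 3, 5 and 8 are assumed values.
HOURS_PER_STORY = {1: 6, 2: 9, 3: 12, 5: 16, 8: 21}


def velocity(stories):
    """Sum of story points, the way velocity is usually computed."""
    return sum(stories)


def sprint_hours(stories):
    """Total effort implied by the non-linear points-to-hours mapping."""
    return sum(HOURS_PER_STORY[points] for points in stories)


last_sprint = [1, 1, 3, 3, 5, 8]  # the six stories from the nth sprint
next_sprint = [1] * 21            # twenty-one 1-point stories

print(velocity(last_sprint), sprint_hours(last_sprint))  # 21 73
print(velocity(next_sprint), sprint_hours(next_sprint))  # 21 126
```

Under these assumed numbers, both sprints have a "velocity" of 21, yet the second one implies far more hours of work than the first.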

Yeah I know, hence we don’t take just one iteration/sprint’s velocity, we take an average across many iterations/sprints.

But it’s a really big stretch to take something which was inherently not meant to be mathematical or statistical in nature and calculate velocity based on it.

If velocity anyway averages out over a period of time, then why not just count the number of stories and use them as your velocity instead of doing story-points?

Over a period of time, stories will roughly be broken down into similar-sized stories, and even if they aren’t, they will average out.

Isn’t that much simpler (with about the same amount of error) than doing all the story point business?
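As a minimal sketch of the two ways of tracking velocity, the snippet below averages both story points and plain story counts over a few sprints. The sprint history is invented for illustration only, and the variable names are hypothetical.

```python
from statistics import mean

# Invented sprint history: each inner list holds the story points of the
# stories completed in one sprint. These numbers are illustrative only.
sprints = [
    [1, 1, 3, 3, 5, 8],
    [2, 3, 3, 5, 5],
    [1, 2, 2, 3, 5, 8],
    [1, 1, 2, 3, 5, 5, 8],
]

# Velocity as the average of summed story points per sprint.
points_velocity = mean(sum(stories) for stories in sprints)

# Velocity as the average number of stories finished per sprint.
count_velocity = mean(len(stories) for stories in sprints)

print(points_velocity, count_velocity)
```

The count-based figure needs no estimation session at all: you only count what got done.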

I used this approach for a few years and did certainly benefit from it. No doubt it’s better than effort estimation upfront. But is this the best we can do?

I know many teams who don’t do effort estimation or relative complexity estimation and have moved to a flow model instead of trying to fit things into a box.

Consider reading my blog on Estimations Considered Harmful.

Posted in Agile, Analysis, Planning | 9 Comments »

Saturday, July 10th, 2010

A prioritized user story backlog helps to understand what to do next, but is a difficult tool for understanding what your whole system is intended to do. A user story map arranges user stories into a useful model to help understand the functionality of the system, identify holes and omissions in your backlog, and effectively plan holistic releases that deliver value to users and the business with each release.

Posted in Agile, agile india, Analysis, Coaching, Planning, post modern agile, Product Development | 2 Comments »
