
Archive for the ‘Tips’ Category

Sunday, June 28th, 2015

If you use Opauth-Twitter and Twitter OAuth suddenly starts failing on OS X Yosemite, the cause may be a CA certificate issue.

OS X Yosemite (10.10) upgraded cURL from 7.30.0 to 7.37.1 [curl 7.37.1 (x86_64-apple-darwin14.0) libcurl/7.37.1 SecureTransport zlib/1.2.5], and since then cURL always tries to verify the remote server's SSL certificate.

With earlier versions, you could set curl_ssl_verifypeer to false and cURL would skip the verification. From 7.37 onward, setting curl_ssl_verifypeer to false makes it complain: “SSL: CA certificate set, but certificate verification is disabled”.

Prior to version 0.60, tmhOAuth did not ship with the CA certificate bundle, so we used to get the following error:

SSL: can’t load CA certificate file <path>/vendor/opauth/Twitter/Vendor/tmhOAuth/cacert.pem

To fix this, download the latest cacert.pem from http://curl.haxx.se/ca/cacert.pem and save it as /Vendor/tmhOAuth/cacert.pem. (The latest version of tmhOAuth already includes this file in its repo.)

Then set curl_ssl_verifypeer to true in the $defaults (optional parameters) in TwitterStrategy.php, on line 48.

P.S.: Turning off curl_ssl_verifypeer is a bad security move. It leaves your server vulnerable to man-in-the-middle attacks.
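If you want to sanity-check a downloaded cacert.pem outside PHP, here is a minimal Python sketch using only the standard `ssl` module. It is not part of Opauth or tmhOAuth. It builds a context that verifies servers against the bundle, which is the same idea as setting curl_ssl_verifypeer to true with a CA file. The function name and script usage are hypothetical.

```python
import ssl


def verifying_context(cafile: str) -> ssl.SSLContext:
    """Return an SSL context that verifies servers against the CA bundle in cafile.

    Raises OSError if the bundle cannot be used: FileNotFoundError when the
    file is missing, typically ssl.SSLError when it holds no certificates.
    """
    # With cafile given, create_default_context loads only that bundle
    # and enables peer and hostname verification.
    ctx = ssl.create_default_context(cafile=cafile)
    # Already the defaults; spelled out to mirror curl_ssl_verifypeer = true.
    ctx.verify_mode = ssl.CERT_REQUIRED
    ctx.check_hostname = True
    return ctx


if __name__ == "__main__":
    import sys

    # Hypothetical usage:
    #   python check_ca.py vendor/opauth/Twitter/Vendor/tmhOAuth/cacert.pem
    try:
        verifying_context(sys.argv[1])
        print("CA bundle loaded; peer verification is on")
    except OSError as exc:
        print(f"Cannot use CA bundle: {exc}")
        sys.exit(1)
```

Missing or empty bundles fail loudly here. That is the point: the fix is to supply a valid bundle, never to switch verification off.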

Posted in Deployment, Open Source, Tips | No Comments »

Friday, October 3rd, 2014

Many teams suffer daily because of slow CI builds. The teams certainly realise the pain, but don’t necessarily take any corrective action. The most common excuses are “we don’t have time” and “we don’t think it can get any better than this.”

Following are some key principles I’ve used when confronted with long-running builds:

- Focus on the Bottlenecks – Profile your builds to find the real culprits; fixing them helps the most. In my experience, the 80-20 rule applies here: fixing 20% of the bottlenecks gives you 80% of the speed gain.

- Divide and Conquer – Turn large monolithic builds into smaller, more focused builds. This typically means restructuring your project into smaller modules or projects, which is a good version control practice anyway. Also, most CI servers support build pipelines, which help you hook all these smaller builds together.

- Turn Sequential Tasks to Parallel Tasks – Once your build is broken into smaller builds, you can run them in parallel. You can also distribute the tasks across multiple slave machines. Also consider running your tests in parallel; many static analysis tools can run in parallel too.

- Reuse – Don’t create or start from scratch if you can avoid it. For example: use pre-compiled jars for dependent code instead of building it every time, especially if it rarely changes. Set up your target environment as a VM and keep it ready. Use a database dump for your seed data instead of building it from an empty DB every time. Often we can use incremental compiles/builds instead of clean builds.

- Avoid/Minimise IO (Disk & Network) – IO can be a huge bottleneck. Turn down logging when running your builds. Prefer an in-process, in-memory DB, and consider tmpfs for an in-memory file system.

- Fail Fast – We want our builds to give us fast feedback. Hence it’s very important to prioritise your build tasks so that the ones most likely to fail run first. In fact, a long time ago we started a project called ProTest, which helps you prioritise your tests by how likely each one is to fail.

- Push unnecessary stuff to a separate build – Things like JavaDocs can be generated in a nightly build.

- Once and Only Once – Avoid unnecessary duplication of steps. For example: copying source files or jars to another location, creating a new Jenkins workspace for every build, creating an empty DB, etc.

- Reduce Noise – Remove unnecessary data and files. Work with a minimal yet sufficient set. Turn down logging levels.

- Time is Money – I guess I’m stating the obvious, but using newer, faster tools is actually cheaper. Moving from CVS/SVN to Git can speed up your build, and newer testing frameworks are faster. Also, hardware keeps getting cheaper while developer costs keep going up. Invest in good hardware like SSDs, faster multi-core CPUs, more RAM, etc. It is far cheaper than your team waiting for builds.

- Profile, Understand and Configure – Ignorance can be fatal. When it comes to builds, you must profile to find the bottlenecks, dig deeper to understand what is going on, and then configure your environment based on the data. For example, setting the right OS parameters and the right compiler flags can make a noticeable difference.

- Keep an Open Mind – Often you will find that the real culprit is some totally unrelated part of your environment, or poorly written code that slows things down. One needs to keep an open mind.
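The Fail Fast and parallel-tasks principles combine naturally. Below is a minimal Python sketch of that combination. It is not ProTest and not any CI server's API. It orders hypothetical build tasks by historical failure rate, runs them on a thread pool, and cancels queued work as soon as one fails. The run and failure counts are assumed to come from your own build history.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class BuildTask:
    name: str
    run: Callable[[], bool]  # returns True on success, False on failure
    runs: int = 0            # past executions (assumed to come from build history)
    failures: int = 0        # past failures

    def failure_rate(self) -> float:
        # Tasks with no history are treated as most likely to fail.
        return self.failures / self.runs if self.runs else 1.0


def prioritise(tasks: List[BuildTask]) -> List[BuildTask]:
    """Most-likely-to-fail first; ties broken by name so the order is stable."""
    return sorted(tasks, key=lambda t: (-t.failure_rate(), t.name))


def run_fail_fast(tasks: List[BuildTask], workers: int = 4) -> Optional[str]:
    """Submit tasks in priority order and run them in parallel.

    Returns the name of the first failure observed, or None if all pass.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(t.run): t for t in prioritise(tasks)}
        for fut in as_completed(futures):
            if not fut.result():
                # Cancel tasks still queued; tasks already running finish.
                for other in futures:
                    other.cancel()
                return futures[fut].name
    return None
```

Treating untried tasks as likely failures puts new tests first, which is usually where breakage shows up. Cancelling only affects work that has not started yet, so smaller tasks make the "fail fast" part more effective.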

Are there any other principles you’ve used?

BTW Ashish and I plan to present this topic at the upcoming Agile Pune 2014 Conference. Would love to see you there.

Posted in Continuous Deployment, Tips | 4 Comments »

Wednesday, October 9th, 2013

It’s pretty common in web apps to use crontab to check certain thresholds at regular intervals and send notifications if a threshold is crossed. Typically I expose a secret URL and use wget to invoke that URL via a cron job.

The following cron will invoke the secret_url every hour.

```
0 */1 * * * /usr/bin/wget "http://nareshjain.com/cron/secret_url"
```

Since this runs as a cron job, we don’t want any output, so we can add the -q and --spider command-line parameters, like:

```
0 */1 * * * /usr/bin/wget -q --spider "http://nareshjain.com/cron/secret_url"
```

The --spider parameter is very handy: it does a dry run, i.e. it checks whether the URL actually exists without downloading it. This way you don’t need to do things like:

```
wget -q "http://nareshjain.com/cron/secret_url" -O /dev/null
```

But when you run the spider version from your terminal, leaving out -q so you can see what wget is doing:

```
wget --spider "http://nareshjain.com/cron/secret_url"
Spider mode enabled. Check if remote file exists.
--2013-10-09 09:05:25--  http://nareshjain.com/cron/secret_url
Resolving nareshjain.com... 223.228.28.190
Connecting to nareshjain.com|223.228.28.190|:80... connected.
HTTP request sent, awaiting response... 404 Not Found
Remote file does not exist -- broken link!!!
```

Yet when you open the same URL in your browser, sure enough, it works. So why does it fail via wget?

The catch is that --spider sends an HTTP HEAD request instead of a GET request.

You can confirm this in your access log:

```
my.ip.add.ress - - [09/Oct/2013:02:46:35 +0000] "HEAD /cron/secret_url HTTP/1.0" 404 0 "-" "Wget/1.11.4"
```

If your secret URL points to an actual file (like secret.php), this does not matter and you should not see any error. However, if you use a framework to define your routes, you need to make sure there is a handler for HEAD requests, not just GET.
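The fix boils down to making the route answer HEAD the same way it answers GET, minus the body. Here is a minimal sketch that uses Python's built-in `http.server` as a stand-in for your framework's router. `run_threshold_check()` is a hypothetical placeholder for the real job, and port 8000 is an arbitrary local choice.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

SECRET_PATH = "/cron/secret_url"  # the path used in the post


def run_threshold_check() -> str:
    """Hypothetical placeholder for the real job: check thresholds, send notifications."""
    return "ok\n"


class CronHandler(BaseHTTPRequestHandler):
    def _handle(self, send_body: bool) -> None:
        if self.path != SECRET_PATH:
            self.send_error(404)  # for HEAD, http.server sends headers only
            return
        body = run_threshold_check().encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        if send_body:
            self.wfile.write(body)

    def do_GET(self) -> None:
        self._handle(send_body=True)

    def do_HEAD(self) -> None:
        # wget --spider sends HEAD: run the same job but omit the body
        self._handle(send_body=False)

    def log_message(self, fmt, *args) -> None:
        pass  # silence per-request logging


if __name__ == "__main__":
    HTTPServer(("127.0.0.1", 8000), CronHandler).serve_forever()
```

Try it with `wget --spider http://127.0.0.1:8000/cron/secret_url`. If you delete `do_HEAD`, `http.server` answers that same request with 501 Not Implemented. That is the same kind of failure a GET-only framework route produces. HTTP treats HEAD as a safe request, so doing real work on it is a pragmatic choice. If that bothers you, the `wget -q ... -O /dev/null` form issues a plain GET instead.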

Posted in Hosting, HTTP, Tips, Tools | No Comments »

Saturday, March 23rd, 2013

If you are building a web app that uses OAuth to authenticate users via Facebook, Google, Twitter and other social networks, testing the app locally on your development machine can be a real challenge.

On your local machine, the app URL might look like http://localhost/my_app/login.xxx, while in the production environment it would be http://my_app.com/login.xxx.

When you try to test the OAuth integration with Facebook (or any other OAuth provider) locally, it will not work. When you create the Facebook app, you have to register the URL where the app lives, and that URL differs between the local and production environments.

So how do you resolve this issue?

One way to resolve this issue is to set up a virtual host on your machine, so that your local environment has the same URL as production.

To achieve this, follow these 4 simple steps:

1. Map your domain name to your local IP address

Add the following line to the /etc/hosts file:

```
127.0.0.1 my_app.com
```

Now when you request http://my_app.com in your browser, the request goes to your local machine.

2. Activate virtual hosts in Apache

Uncomment the following line (remove the #) in /private/etc/apache2/httpd.conf:

```
#Include /private/etc/apache2/extra/httpd-vhosts.conf
```

3. Add the virtual host in Apache

Add the following VHost entry to the /private/etc/apache2/extra/httpd-vhosts.conf file:

```
<VirtualHost *:80>
    DocumentRoot "/Users/username/Sites/my_app"
    ServerName my_app.com
</VirtualHost>
```

4. Restart Apache

System Preferences > “Sharing” > uncheck the “Web Sharing” box (Apache stops), then check it again (Apache starts).

Now, http://my_app.com/login.xxx will be served locally.
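It can help to confirm step 1 before debugging Apache. Here is a small Python sketch that checks whether the hosts file maps your production domain to the loopback address. It follows the usual hosts-file layout: an IP followed by one or more names, with # starting a comment. It returns the first matching entry. The function names are hypothetical.

```python
import ipaddress
from typing import Optional


def hosts_ip_for(hosts_text: str, hostname: str) -> Optional[str]:
    """Return the IP of the first hosts-file line that lists hostname, or None."""
    wanted = hostname.lower()  # hostnames are case-insensitive
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue  # skip blank and comment-only lines
        ip, *names = line.split()
        if wanted in (n.lower() for n in names):
            return ip
    return None


def points_to_loopback(hosts_text: str, hostname: str) -> bool:
    """True if hostname is mapped to a loopback address such as 127.0.0.1 or ::1."""
    ip = hosts_ip_for(hosts_text, hostname)
    return ip is not None and ipaddress.ip_address(ip).is_loopback


if __name__ == "__main__":
    with open("/etc/hosts") as f:
        print(points_to_loopback(f.read(), "my_app.com"))
```

If this prints True but the browser still reaches production, a cached DNS lookup or a browser proxy setting is a more likely culprit than the hosts file.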

Posted in Deployment, Hosting, HTTP, Tips | No Comments »

Saturday, January 5th, 2013

Posted in Agile, Design, Tips, Tools | 1 Comment »

Wednesday, November 21st, 2012

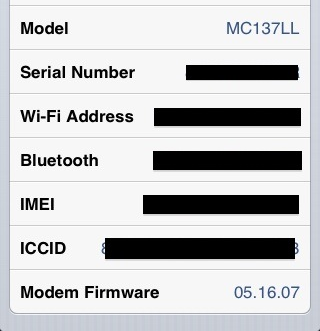

Over the years, I have bought many iPhones in the US for family and friends. Apple has only been selling unlocked phones for the last 2 years. Before that, for most phones, I had to find a way to jailbreak and carrier-unlock the phone via Ultrasn0w.

To carrier-unlock an iPhone 3GS, I had to upgrade the phone’s baseband to 6.15.00 (the iPad baseband). Then, using Ultrasn0w, I could use the phone with any service provider in India.

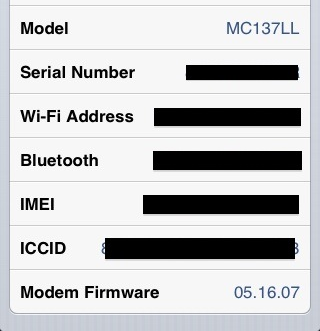

However, for the iPhone 4 with baseband 4.11.08, Ultrasn0w could not do the carrier unlock, and there was no way to upgrade or downgrade the baseband. I had given up hope, and my daughter was happily using those iPhones as toys. Just then I came across BeijingiPhoneRepair’s IMEI Unlocking Steps. I was very skeptical that this would work, but I paid $15 for one phone and it worked like a charm. I was able to unlock all my iPhone 4 phones.

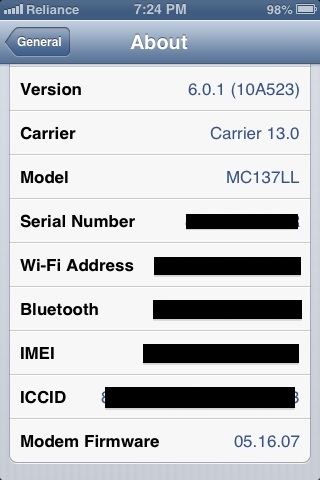

Then there was an iPhone 3GS that I had jailbroken and whose baseband I had upgraded to 6.15.00. I wanted to upgrade it to iOS 6.0.1, so I thought I might as well use the same IMEI unlock. After paying and following exactly the same steps I had used for the iPhone 4, I realized there was an issue:

It refused to recognize the SIM card. Then I came across this article from Richard Ker of BeijingiPhoneRepair, which explains that iTunes does not let you activate an iPhone 3GS with baseband 6.15.00 on iOS 6.

The solution they propose is to downgrade your baseband to 5.13.04 first. To downgrade the baseband, you get the original iPhone 3GS iOS 5.1.1 firmware and then use Redsn0w to flash the baseband. Since I already had iOS 6.0.1 installed on my phone, I kept getting the following error:

AppleBaseband: Could not find mux function error.

I tried downgrading my phone to iOS 5.1.1 using Redsn0w and iTunes, but had no luck. Apple does not allow you to downgrade from iOS 6 to iOS 5: you need saved SHSH blobs for iOS 5.1.1 to downgrade, because Apple has stopped signing that firmware. Using Redsn0w, I searched Cydia’s server to see if SHSH blobs were available. No luck. So I took the following steps to fix the issue:

- Using Redsn0w (0.9.14b2 or above), I put my phone in DFU mode.

- During the restore process, iTunes checks with Apple’s server whether the device is allowed to install that specific firmware version. To work around this check, I appended the line “74.208.105.171 gs.apple.com” to my hosts file (/etc/hosts) to fool iTunes.

- Then, using iTunes, I restored the iOS 4.1 (8B117) firmware on my phone. I usually download firmware from iClarified’s site.

- Launched Redsn0w and, under Extras > Select IPSW, selected the original iOS 4.1 (8B117) firmware.

- Did a controlled shutdown of my iPhone (“slide to power off”).

- Returned to the first screen and clicked ‘Jailbreak’.

- Checked the ‘Downgrade from iPad baseband’ checkbox, unchecked Cydia and clicked Next.

- Redsn0w started the downgrade process, and finally I saw the ‘Flashing Baseband’ screen on my iPhone with the Pwnapple icon. DO NOT INTERRUPT your iPhone while baseband flashing is in progress. This step takes a good 5-10 minutes.

- When this was done, after rebooting my iPhone, the baseband was downgraded to 5.13.04.

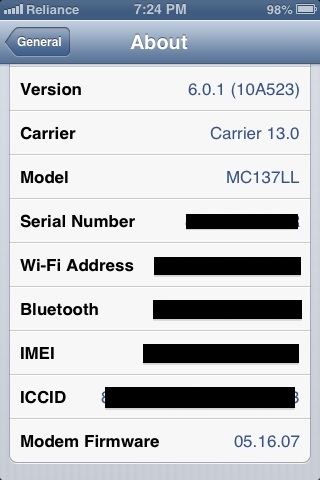

- Then I connected my phone to iTunes once more and upgraded my firmware to iOS 6.0.1.

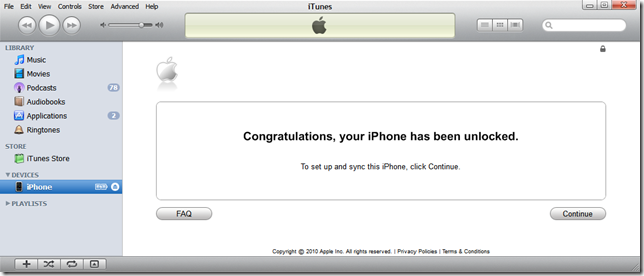

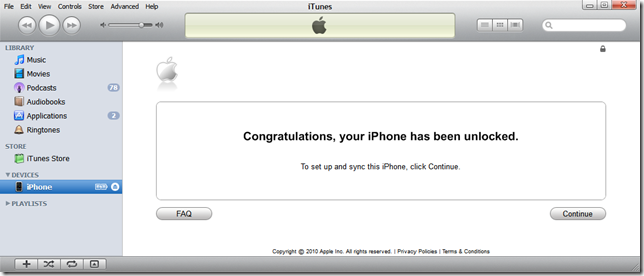

- Once the upgrade was done, I saw this screen on iTunes.

- Now my phone can connect to the local service provider.

Posted in Hacking, iPhone, Tips | 77 Comments »

Saturday, October 20th, 2012

For frequent emails, I still see people putting a large number of email addresses in the To or CC field instead of creating a group. For example, the housing society I live in has around 200 houses, and emails between society members use this approach.

Their workflow goes something like this: dig through past emails looking for everyone’s email address, check whether each address is still valid, and then send an email to all of them, hoping it will reach everyone and won’t end up in their spam folders. (If you email a large number of people, there is a good chance your email will end up in the spam folder.)

In general, the approach highlighted above is considered bad practice. Below are a few key issues with the way we currently email:

- Whenever we have new members, it’s hard for them to get on this list. Even if one person adds them, there’s no guarantee everyone will include them in future emails.

- Also, new members don’t have access to any of the previous emails, so we sometimes see the same emails repeated.

- It’s hard to follow these email chains. It’s not clear who has responded to whom and when. One has to dig through emails to reconstruct the timeline.

- Emails often bounce because of a wrong email address. There is no easy way to maintain validated email addresses, and copying and pasting addresses often causes these problems too.

- It’s hard to categorize these emails. I’m only interested in certain kinds of emails, but email doesn’t let me tag or categorize them easily.

- I have no control over when these emails land in my inbox. For example, I would like to see them as a digest at the end of the day. A few of these mails are important; most are FYI emails, complaints or just pure noise.

- People have no control over which of their email addresses gets used. For example, I get these emails on 2 email accounts and can’t really do anything about it, other than asking people to drop me from their lists.

- Last but not least, there is a privacy issue: I don’t want my email address floating around on email chains.

To avoid these problems, it’s best to create a specific group or mailing list and ask people to join it. Going forward, if you want to email everyone, just compose your email and send it to the group’s email address. There is no need to remember everyone’s email addresses, new people can simply join the list, and members can update their email addresses and personal email preferences themselves.

Posted in Tips | No Comments »

Monday, July 30th, 2012

Recently I had the “pleasure” of upgrading from CMSMS 1.9.3 to 1.10.3.

- Downloaded the cmsmadesimple-1.10.3-full.tar.gz

- Extracted it over the existing installation, overwriting some of the files from the older version (1.9.3) [tar -xvf cmsmadesimple-1.10.3-full.tar.gz -C my_existing_site_installation_folder]

- Ran the upgrade script by opening http://my-site.com/install/upgrade.php

I kept getting stuck at step 3, where it complained:

Fatal error: Call to undefined method cms_config::save() in /install/lib/classes/CMSUpgradePage3.class.php on line 30

Digging around a little, I realized cms_config is no longer available.

Then I tried downloading cmsmadesimple-1.9.4.3-full.tar.gz instead.

Luckily this time I was able to go past step 3 without any problem.

So now I was on version 1.9.4.3, but I wanted to get to 1.10.3. So I:

- Upgraded all my modules to the latest version, as per their advice

- Downloaded cmsmadesimple-1.10.3-full.tar.gz

- Copied its contents over my installation

- Ran the upgrade script.

Everything went fine; it even updated my database schema to version 35 successfully. But when I hit Continue on step 6, it hung there forever and eventually came back with a 500 Internal Server Error. Looking at the log file, all I could see was:

“2012/07/28 06:28:35 [error] 23816#0: *3319000 upstream timed out (110: Connection timed out) while reading response header from upstream”

It turns out that in 1.10 the CMSMS dev team broke backward compatibility in a whole bunch of places. In step 6, the upgrade tries to upgrade and reinstall the installed modules, and during this process it just conks out.

Then I uninstalled all my modules and ran the upgrade script again. Abracadabra, the upgrade went just fine.

- Then I had to go in and install those modules again.

- Also had to update most of the modules to the latest version which is compatible with 1.10.

- And restore the data used by the modules.

Had I only known all of this, I could have saved a few hours of my precious life.

P.S.: Just as I finished all of this, I saw that the CMSMS dev team had released the latest stable version, 1.11.

Posted in Deployment, Hosting, Tips | 2 Comments »

Monday, March 26th, 2012

There is something positive to be said about joining teams where you are the worst team member. It stretches you and forces you to grow in ways you would not if left alone.

In my career, I’ve been part of many teams where, for the first few days, I could not comprehend what others were talking about. I felt completely stupid and small. But my desire to learn and passion to excel soon brought a day when I felt like an equal. The journey usually did not stop there. Soon I would be leading the discussions.

Usually, once I felt I was leading the discussions, I realized it was time to move on and join another team where I would feel like the worst band member again.

There are certainly other ways to progress in your career, but this attitude has helped me a lot.

Posted in Self Help, Tips | No Comments »

Saturday, October 22nd, 2011

How to destroy a team by introducing various forms of churn?

- Have the Product Management change high-level vision and priority frequently.

- Stop the teams from collaborating with other teams, Architects and important stakeholders.

- Make sure testing and integration is done late in the cycle.

- As soon as a team member gains enough experience on the team, move him/her out of the team to play other roles.

- In critical areas of the system, force the team to produce a poor implementation.

- Structure the teams architecturally to ensure there are heavy interdependencies between them.

- Monitor the team’s commitments very closely and make sure they feel embarrassed about estimating wrongly.

- Ensure the first 15-30 mins of every meeting is spent on useless talk, before getting to the crux of the matter.

- Measure Churn and put clear process in place to minimize churn 😉

Posted in Agile, Coaching, Metrics, Organizational, Tips | 2 Comments »
