`

| |

|

Archive for the ‘Continuous Deployment’ Category

Friday, October 3rd, 2014

Many teams suffer daily due to slow CI builds. The teams certainly realise the pain, but don’t necessarily take any corrective action. The most common excuse is we don’t have time or we don’t think it can get better than this.

Following are some key principles, I’ve used when confronted with long running builds:

- Focus on the Bottlenecks – Profile your builds to find the real culprits. Fixing them will help the most. IMHE I’ve seen the 80-20 rule apply here. Fixing 20% of the bottlenecks will give you 80% gain in speed.

- Divide and Conquer – Turn large monolithic builds into smaller, more focused builds. This would typically lead to restructuring your project into smaller modules or projects, which is a good version control practice anyway. Also most CI servers support a build pipeline, which will help you hookup all these smaller builds together.

- Turn Sequential Tasks to Parallel Tasks – By breaking your builds into smaller builds, you can now run them in parallel. You can also distribute the tasks across multiple slave machines. Also consider running your tests in parallel. Many static analysis tools can run in parallel.

- Reuse – Don’t create/start from scratch if you can avoid it. For ex: have pre-compiled code (jars) for dependent code instead of building it every time, esp. if it rarely changes. Set up your target env as a VM and keep it ready. Use a database dump for your seed data, instead of building it from an empty DB every time. Many times we use incremental compile/build, instead of clean builds.

- Avoid/Minimise IO (Disk & Network) – IOs can be a huge bottleneck. Turn down logging when running your builds. Preference using an in-process & in-memory DB, consider tmpfs for in-memory file system.

- Fail Fast – We want our builds to give us fast feedback. Hence its very important to prioritise your build tasks based on what is most likely to fail first. In fact long back we had started a project called ProTest, which helps you prioritise your tests on which test is most likely to fail.

- Push unnecessary stuff to a separate build – Things like JavaDocs can be done nightly

- Once and Only Once – avoid unnecessary duplication in steps. For ex: copying src files or jars to another location, creating a new Jenkins workspace every build, empty DB creation, etc.

- Reduce Noise – remove unnecessary data and file. Work on a minimal, yet apt set. Turn down logging levels.

- Time is Money -I guess I’m stating the obvious. But using newer, faster tools is actually cheaper. Moving from CVS/SVN to Git can speed up your build, newer testing frameworks are faster. Also Hardware is getting cheaper day by day, while developer’s cost is going up day by day. Invest in good hardware like SSD, Faster Multi-core CPUs, better RAM, etc. It would be way cheaper than your team waiting for the builds.

- Profile, Understand and Configure – Ignorance can be fatal. When it comes to build, you must profile your build to find the bottleneck. Go deeper to understand what is going on. And then based on data, configure your environment. For ex: setting the right OS parameters, set the right compiler flags can make a noticeable difference.

- Keep an Open Mind – Many times, you will find the real culprits might be some totally unrelated part of your environment. Many times we also find poorly written code which can slow things down. One needs to keep an open mind.

Are there any other principles you’ve used?

BTW Ashish and I plan to present this topic at the upcoming Agile Pune 2014 Conference. Would love to see you there.

Posted in Continuous Deployment, Tips | 4 Comments »

Tuesday, March 19th, 2013

Recently at the Agile India 2013 Conference I ran an introductory workshop on Behavior Driven Development. This workshop offered a comprehensive, hands-on introduction to behavior driven development via an interactive-demo.

Over the past decade, eXtreme Programming practices like Test-Driven Development (TDD) and Behaviour Driven Development (BDD) have fundamentally changed software development processes and inherently how engineers work. Practitioners claim that it has helped them significantly improve their collaboration with business, development speed, design & code quality and responsiveness to changing requirements. Software professionals across the board, from Internet startups to medical device companies to space research organizations, today have embraced these practices.

This workshop explores the foundations of TDD & BDD with the help of various patterns, strategies, tools and techniques.

Posted in Agile, Continuous Deployment, Design, Programming | No Comments »

Tuesday, March 19th, 2013

As more and more companies are moving to the Cloud, they want their latest, greatest software features to be available to their users as quickly as they are built. However there are several issues blocking them from moving ahead.

One key issue is the massive amount of time it takes for someone to certify that the new feature is indeed working as expected and also to assure that the rest of the features will continuing to work. In spite of this long waiting cycle, we still cannot assure that our software will not have any issues. In fact, many times our assumptions about the user’s needs or behavior might itself be wrong. But this long testing cycle only helps us validate that our assumptions works as assumed.

How can we break out of this rut & get thin slices of our features in front of our users to validate our assumptions early?

Most software organizations today suffer from what I call, the “Inverted Testing Pyramid” problem. They spend maximum time and effort manually checking software. Some invest in automation, but mostly building slow, complex, fragile end-to-end GUI test. Very little effort is spent on building a solid foundation of unit & acceptance tests.

This over-investment in end-to-end tests is a slippery slope. Once you start on this path, you end up investing even more time & effort on testing which gives you diminishing returns.

In this session Naresh Jain will explain the key misconceptions that has lead to the inverted testing pyramid approach being massively adopted, main drawbacks of this approach and how to turn your organization around to get the right testing pyramid.

Posted in Agile, Continuous Deployment, Deployment, Testing | No Comments »

Tuesday, November 1st, 2011

“Release Early, Release Often” is a proven mantra, but what happens when you push this practice to it’s limits? .i.e. deploying latest code changes to the production servers every time a developer checks-in code?

At Industrial Logic, developers are deploying code dozens of times a day, rapidly responding to their customers and reducing their “code inventory”.

This talk will demonstrate our approach, deployment architecture, tools and culture needed for CD and how at Industrial Logic, we gradually got there.

Process/Mechanics

This will be a 60 mins interactive talk with a demo. Also has a small group activity as an icebreaker.

Key takeaway: When we started about 2 years ago, it felt like it was a huge step to achieve CD. Almost a all or nothing. Over the next 6 months we were able to break down the problem and achieve CD in baby steps. I think that approach we took to CD is a key take away from this session.

Talk Outline

- Context Setting: Need for Continuous Integration (3 mins)

- Next steps to CI (2 mins)

- Intro to Continuous Deployment (5 mins)

- Demo of CD at Freeset (for Content Delivery on Web) (10 mins) – a quick, live walk thru of how the deployment and servers are set up

- Benefits of CD (5 mins)

- Demo of CD for Industrial Logic’s eLearning (15 mins) – a detailed walk thru of our evolution and live demo of the steps that take place during our CD process

- Zero Downtime deployment (10 mins)

- CD’s Impact on Team Culture (5 mins)

- Q&A (5 mins)

Target Audience

- CTO

- Architect

- Tech Lead

- Developers

- Operations

Context

Industrial Logic’s eLearning context? number of changes, developers, customers , etc…?

Industrial Logic’s eLearning has rich multi-media interactive content delivered over the web. Our eLearning modules (called Albums) has pictures & text, videos, quizes, programming exercises (labs) in 5 different programming languages, packing system to validate & produce the labs, plugins for different IDEs on different platforms to record programming sessions, analysis engine to score student’s lab work in different languages, commenting system, reporting system to generate different kind of student reports, etc.

We have 2 kinds of changes, eLearning platform changes (requires updating code or configuration) or content changes (either code or any other multi-media changes.) This is managed by 5 distributed contributors.

On an average we’ve seen about 12 check-ins per day.

Our customers are developers, managers and L&D teams from companies like Google, GE Energy, HP, EMC, Philips, and many other fortune 100 companies. Our customers have very high expectations from our side. We have to demonstrate what we preach.

Learning outcomes

- General Architectural considerations for CD

- Tools and Cultural change required to embrace CD

- How to achieve Zero-downtime deploys (including databases)

- How to slice work (stories) such that something is deployable and usable very early on

- How to build different visibility levels such that new/experimental features are only visible to subset of users

- What Delivery tests do

- You should walk away with some good ideas of how your company can practice CD

Slides from Previous Talks

Posted in Agile, Continuous Deployment, Deployment, Lean Startup, Product Development, Testing, Tools | No Comments »

Friday, October 7th, 2011

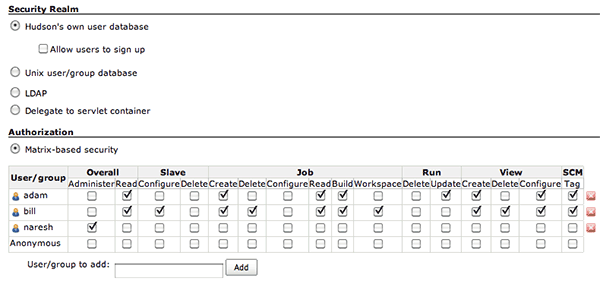

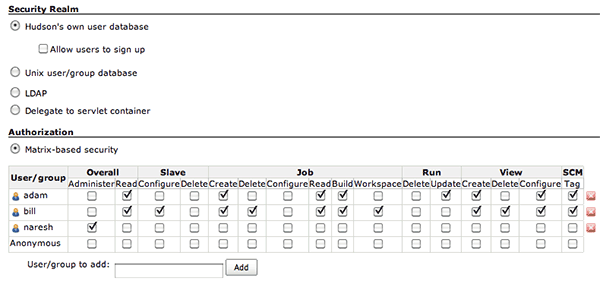

Today I was adding some new users to our Hudson CI Server. Since we use Husdon’s Own User Database, I had to add new users in Hudson. However after that, when the new user tried to login, the user kept getting the following error:

Access denied User is missing the Read permission.

Google did not reveal any thing obvious. Then I started looking at Hudson configuration and realized that we’re using Hudson’s Matrix-based security authorization.

So every time we add a new user, we have to add the same user here as well. What a pain! Ideally permissions should be a link right from the new user’s page.

I wish we stop using the Martix-based security authorization and instead just use “Anyone can do anything” option. One less administrative step.

Posted in Continuous Deployment, Tools, UX | No Comments »

Thursday, July 21st, 2011

Currently at Industrial Logic we use Nginx as a reverse proxy to our Tomcat web server cluster.

Today, while running a particular report with large dataset, we started getting timeouts errors. When we looked at the Nginx error.log, we found the following error:

[error] 26649#0: *9155803 upstream timed out (110: Connection timed out)

while reading response header from upstream,

client: xxx.xxx.xxx.xxx, server: elearning.industriallogic.com, request:

"GET our_url HTTP/1.1", upstream: "internal_server_url",

host: "elearning.industriallogic.com", referrer: "requested_url" |

[error] 26649#0: *9155803 upstream timed out (110: Connection timed out)

while reading response header from upstream,

client: xxx.xxx.xxx.xxx, server: elearning.industriallogic.com, request:

"GET our_url HTTP/1.1", upstream: "internal_server_url",

host: "elearning.industriallogic.com", referrer: "requested_url" After digging around for a while, I discovered that our web server is taking more than 60 secs to respond. Nginx has a directive called proxy_read_timeout which defaults to 60 secs. It determines how long nginx will wait to get the response to a request.

In nginx.conf file, setting proxy_read_timeout to 120 secs solved our problem.

server {

listen 80;

server_name elearning.industriallogic.com;

server_name_in_redirect off;

port_in_redirect off;

location / {

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Real-Host $host;

proxy_read_timeout 120;

...

}

...

} |

server {

listen 80;

server_name elearning.industriallogic.com;

server_name_in_redirect off;

port_in_redirect off;

location / {

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Real-Host $host;

proxy_read_timeout 120;

...

}

...

}

Posted in Continuous Deployment, Deployment, HTTP | 8 Comments »

Tuesday, June 21st, 2011

Time and again, I find developers (including myself) over-relying on our automated tests. esp. unit tests which run fast and reliably.

In the urge to save time today, we want to automate everything (which is good), but then we become blindfolded to this desire. Only later to realize that we’ve spent a lot more time debugging issues that our automated tests did not catch (leave the embarrassment caused because of this aside.)

I’ve come to believe:

A little bit of manual, sanity testing by the developer before checking in code can save hours of debugging and embarrassment.

Again this is contextual and needs personal judgement based on the nature of the change one makes to the code.

In addition to quickly doing some manual, sanity test on your machine before checking, it can be extremely important to do some exploratory testing as well. However, not always we can test things locally. In those cases, we can test them on a staging environment or on a live server. But its important for you to discover the issue much before your users encounter it.

P.S: Recently we faced Error CS0234: The type or namespace name ‘Specialized’ does not exist in the namespace ‘System.Collections’ issue, which prompted me to write this blog post.

Posted in Agile, Continuous Deployment, Deployment, Programming, Testing | 2 Comments »

Monday, April 18th, 2011

Building on top of my previous blog entry: Version Control Branching (extensively) Considered Harmful

I always discourage teams from preemptively branching a release candidate and then splitting their team to harden the release while rest of the team continues working on next release features.

My reasoning:

- Increases the work-in-progress and creates a lot of planning, management, version-control, testing, etc. overheads.

- In the grand scheme of things, we are focusing on resource utilization, but the throughput of the overall system is actually reducing.

- During development, teams get very focused on churning out features. Subconsciously they know there will be a hardening/optimization phase at the end, so they tend to cut corners for short-term speed gains. This attitude had a snowball effect. Overall encourages a “not-my-problem” attitude towards quality, performance and overall usability.

- The team (developers, testers and managers) responsible for hardening the release have to work extremely hard, under high pressure causing them to burn-out (and possibly introducing more problems into the system.) They have to suffer for the mistakes others have done. Does not seem like a fair system.

- Because the team is under high pressure to deliver the release, even though they know something really needs to be redesigned/refactored, they just patch it up. Constantly doing this, really creates a big ball of complex mud that only a few people understand.

- Creates a “Knowledge/Skill divide” between the developers and testers of the team. Generally the best (most trusted and knowledgable) members are pick up to work on the release hardening and performance optimization. They learn many interesting things while doing this. This newly acquired knowledge does not effectively get communicate back to other team members (mostly developers). Others continue doing what they used to do (potentially wrong things which the hardening team has to fix later.)

- As releases pass by, there are fewer and fewer people who understand the overall system and only they are able to effectively harden the project. This is a huge project risk.

- Over a period of time, every new release needs more hardening time due to the points highlighted above. This approach does not seem like a good strategy of getting out of the problem.

If something hurts, do it all the time to reduce the pain and get better at it.

Hence we should build release hardening as much as possible into the routine everyday work. If you still need hardening at the end, then instead of splitting the teams, we should let the whole swamp on making the release.

Also usually I notice that if only a subset of the team can effectively do the hardening, then its a good indication that the team is over-staffed and that might be one of the reasons for many problems in the first place. It might be worth considering down-sizing your team to see if some of those problems can be addressed.

Posted in Agile, Continuous Deployment, Learning, Organizational, Planning, Programming, Testing | No Comments »

Sunday, April 17th, 2011

Branching is a powerful tool in managing development and releases using a version control system. A branch produces a split in the code stream.

One recurring debate over the years is what goes on the trunk (main baseline) and what goes on the branch. How do you decide what work will happen on the trunk, and what will happen on the branch?

Here is my rule:

Branch rarely and branch as late as possible. .i.e. Branch only when its absolutely necessary.

You should branch whenever you cannot pursue and record two development efforts in one branch. The purpose of branching is to isolate development effort. Using a branch is always more involved than using the trunk, so the trunk should be used in majority of the cases, while the branch should be used in rare cases.

According to Ned, historically there were two branch types which solved most development problems:

- Fixes Branch: while feature work continues on the trunk, a fixes branch is created to hold the fixes to the latest shipped version of the software. This allows you to fix problems without having to wait for the latest crop of features to be finished and stabilized.

- Feature Branch: if a particular feature is disruptive enough or speculative enough that you don’t want the entire development team to have to suffer through its early stages, you can create a branch on which to do the work.

Continuous Delivery (and deployment) goes one step further and solves hard development problems and in the process avoiding the need for branching.

What are the problems with branching?

- Effort, Time and Cognitive overhead taken to merge code can be actually significant. The later we merge the worse it gets.

- Potential amount of rework and bugs introduced during the merging process is high

- Cannot dynamically create a CI build for each branch, hence lack of feedback

- Instead of making small, safe and testable changes to the code base, it encourages developers to make big bang, unsafe changes

P.S: I this blog, I’m mostly focusing on Client-Server (centralized) version control system. Distributed VCS (DVCS) have a very different working model.

Posted in Agile, Continuous Deployment, Deployment, Lean Startup, Product Development, Programming | 1 Comment »

|