Archive for the ‘Analysis’ Category

Thursday, June 19th, 2014

Remember the dot-com days of Webvan and Pets.com? We took traditional businesses and gave them an online presence. Rapidly acquiring a large customer base was the sole goal of many dot-coms. “If we can get enough users, we can easily figure out how to monetize it.” And all of this made perfect sense expressed in dollars and cents. I know people who melted down Yahoo Finance’s servers by checking their favourite stocks’ prices throughout the day, calculating their (paper) net worth in real time. If you were not part of this madness, you were certainly considered stupid.

But then on March 10, 2000, the perspective changed. Suddenly it became clear that this was really a bubble. Without having real profits (or even revenue/cash-flow), it was really just a house of cards. In hindsight, the entire dot-com burst made perfect sense. But why wasn’t this obvious to everyone (including me) to start with?

In a complex adaptive system, causality is retrospectively coherent, i.e. hindsight does not lead to foresight. When we look back at the events, we can (relatively) easily construct a theory to explain the rationale behind the occurrence of these events. In fact, when we look back, the reasons are so obvious that one can easily be fooled into believing that “If only we spent more time carefully analysing and thinking through the situation at hand, we could completely avoid unwanted events in the future.” Yet, time and again, we’ve been caught by surprise, and it appears almost impossible to predict such events ahead of time. Call it the Black Swan effect or whatever name you fancy.

This effect gives rise to a classic management dilemma – the Predictability Paradox (pdf). In their zeal to improve the effectiveness and reliability of software development, managers institutionalise practices that unfortunately decrease, rather than increase, the predictability of the product’s success. Most companies spend an awful lot of effort and money to analyse the past, derive patterns and best practices, set targets and create processes to prevent past failures and produce ideal future goals. If software development were highly structured, if we had a stable environment and good data points from a million other projects, this approach might work. But for software development, which is a creative problem-solving domain with high levels of uncertainty and each project having a unique context, these techniques (best practices) are rather dangerous.

In our domain,

- We need to break the vague problem down into small safe-fail experiments.

- Then execute each experiment in short iterative and incremental cycles.

- We need to focus on tight feedback loops, which will help us adapt & co-evolve the system. (We cannot be stuck with analysis paralysis.)

- We need to probe the system with experiments and find emergent practices.

- And then apply these practices in a given context, for a short duration.

- Speed and Sustainability are extremely important factors.

This is what I mean when I say “Action Precedes Clarity”.

Posted in Agile, Analysis, Cognitive Science, Experiment, Lean Startup | 3 Comments »

Tuesday, March 27th, 2012

Many friends responded to my previous post on How Much Should You Think about the Long-Term? saying:

Even if the future is uncertain and we know it will change, we should always plan for the long-term. Without a plan, we cease to move forward.

I’m not necessarily in favor of or against this philosophy. However, I’m concerned about the following points:

- Effort: The effort required to put together an initial direction is very different from the effort required to put a (proper) plan together. Is that extra effort really worth it, especially when we know things will change?

- Attachment: Sometimes we get attached to our plans. Even when we see things are not quite in line with our plan, we think it’s because we’ve not given it enough time or we’ve not done justice to it.

- Conditioned: Sometimes I notice that when we have a plan in place, knowingly or unknowingly we stop watching out for certain things. Mentally we are at ease and we build a shield around us. We get in the groove of our plan and miss some wonderful opportunities along the way.

The amount of planning required seems to be directly proportional to the size of your team.

If your team consists of a couple of people, you can go fairly lightweight. And that’s my long-term plan to deal with uncertainty.

Posted in Agile, Analysis, Cognitive Science, Learning, Organizational, Planning | 2 Comments »

Tuesday, March 27th, 2012

Often people tell you that “You should think about the long-term.”

Sometimes people tell you, “Forget long-term, it’s too vague, but you should at least think beyond the near-term.”

Really?

Unfortunately, the part of my brain (the prefrontal cortex) that can see and analyze the future has failed to develop compared to that of other smart beings.

At times, I try to fool myself saying I can anticipate the future, but usually when I get there (the future) it’s quite different. I realize that the way I think about the future is fundamentally flawed. I take the present and fill it with random guesses about something that might happen. But I always miss things that I’m not aware of or not exposed to.

In today’s world, when there are a lot of new ideas/stuff going around us, I’m amazed at how others can project themselves into the future and plan for the long term.

Imagine a tech company making their long-term plan 5 years ago, when there were no iPads/tablets. They all must have guessed a tablet revolution and accounted for that in their long-term plans. Or even if they did not, it would have been easy for them to embrace it, right?

You could argue that the tablet revolution is a one-off phenomenon or an outlier. Generally things around here are very predictable and we can plan our long-term without an issue. Global economics, stability of government, rules and regulations, emergence of new technologies, new markets, movement of people, changes in their aspirations, environmental issues, none of these impact us in any way.

Good for you! Unfortunately I don’t live in a world like that (or at least don’t fool myself thinking about the world that way.)

By now, you must be aware that we live in a complex adaptive world and we humans ourselves are complex adaptive systems. In a complex adaptive system, causality is retrospectively coherent, i.e. hindsight does not lead to foresight.

10 years ago, when I first read about YAGNI and DTSTTCPW, I thought that was profound. It was against the common wisdom of how people designed software. Software development has come a long way since then. But is XP the best way to build software? Don’t know. Question is, people who used these principles did they build great systems? Answer is: Yes, quite a few of them.

In essence, I think one should certainly think about the future, make reasonable (quick) guesses and move on. Key is to always keep an open mind and listen to subtle changes around us.

One cannot rely solely on one’s “intuition” about the long term. Arguing about things you’ve all only guessed seems like a huge waste of time and effort. Remember there is a point of diminishing returns: the more time you spend thinking long-term, the lower your chances of getting it right.

I’m a strong believer in “Action Precedes Clarity!”

Update: In response to many comments: When the Future is Uncertain, How Important is A Long-Term Plan?

Posted in Agile, Analysis, Cognitive Science, Learning, Organizational, Planning | 1 Comment »

Tuesday, November 1st, 2011

Many product companies struggle with a big challenge: how to identify a Minimum Viable Product that will let them quickly validate their product hypothesis?

Teams that share the product vision and agree on priorities for features are able to move faster and more effectively.

During this workshop, we’ll take a hypothetical product and coach you on how to effectively come up with an evolutionary roadmap for your product.

This day-long workshop teaches you how to collaborate on the vision of the product and create a Product Backlog, a User Story map and a pragmatic Release Plan.

Detailed Activity Breakup

- PART 1: UNDERSTAND PRODUCT CONTEXT

  - Introduction

  - Define Product Vision

  - Identify Users That Matter

  - Create User Personas

  - Define User Goals

  - A Day-In-Life Of Each Persona

- PART 2: BUILD INITIAL STORY MAP FROM ACTIVITY MODEL

  - Prioritize Personas

  - Break Down Activities And Tasks From User Goals

  - Lay Out Goals Activities And Tasks

  - Walk Through And Refine Activity Model

- PART 3: CREATE FIRST-CUT PRODUCT ROAD MAP

  - Prioritize High Level Tasks

  - Define Themes

  - Refine Tasks

  - Define Minimum Viable Product

  - Identify Internal And External Release Milestones

- PART 4: WRITE USER STORIES FOR THE FIRST RELEASE

  - Define User Task Level Acceptance Criteria

  - Break Down User Tasks To User Stories Based On Acceptance Criteria

  - Refine Acceptance Criteria For Each Story

  - Find Ways To Further Thin-Slice User Stories

  - Capture Assumptions And Non-Functional Requirements

- PART 5: REFINE FIRST INTERNAL RELEASE BASED ON ESTIMATES

  - Define Relative Size Of User Stories

  - Refine Internal Release Milestones For First-Release Based On Estimates

  - Define Goals For Each Release

  - Refine Product And Project Risks

  - Present And Commit To The Plan

- PART 6: RETROSPECTIVE

Each part will take roughly 30 mins.

I’ve facilitated this workshop for many organizations (from small startups to large enterprises).

More details: Product Discovery Workshop from Industrial Logic

Techniques

Focused Break-Out Sessions, Group Activities, Interactive Dialogues, Presentations, Heated Debates/Discussions and Some Fun Games

Target Audience

- Product Owner

- Release/Project Manager

- Subject Matter Expert, Domain Expert, or Business Analyst

- User Experience team

- Architect/Tech Lead

- Core Development Team (including developers, testers, DBAs, etc.)

This tutorial can take a maximum of 30 people (3 teams of 10 people each).

Workshop Prerequisites

- Required: working knowledge of Agile (iterative and incremental software delivery models)

- Required: working knowledge of personas, user stories, backlogs, acceptance criteria, etc.

Testimonials

“I come away from this workshop having learned a great deal about the process and equally about many strategies and nuances of facilitating it. Invaluable!

Naresh Jain clearly has extensive experience with the Product Discovery Workshop. He conveyed the principles and practices underlying the process very well, with examples from past experience and application to the actual project addressed in the workshop. His ability to quickly relate to the project and team members, and to focus on the specific details for the decomposition of this project at the various levels (goals/roles, activities, tasks), is remarkable and a good example for those learning to facilitate the workshop.

Key take-aways for me include the technique of acceptance criteria driven decomposition, and the point that it is useful to map existing software to provide a baseline framework for future additions.”

Doug Brophy, Agile Expert, GE Energy

Learning outcomes

- Understand the thought process and steps involved during a typical product discovery and release planning session

- Using various User-Centered Design techniques, learn how to create a User Story Map to help you visualize your product

- Understand various prioritization techniques that work at the Business-Goal and User-Persona Level

- Learn how to decompose User Activities into User Tasks and then into User Stories

- Apply an Acceptance Criteria-Driven Discovery approach to flush out thin slices of functionality that cut across the system

- Identify various techniques to narrow the scope of your releases, without reducing the value delivered to the users

- Improve confidence and collaboration between the business and engineering teams

- Practice key techniques to work in short cycles to get rapid feedback and reduce risk

Posted in Agile, agile india, Analysis, Coaching, Conference, Design, Lean Startup, Planning, Product Development, Training | No Comments »

Saturday, December 4th, 2010

Every day I hear horror stories of how developers are harassed by managers and customers for not having predictable/stable velocity. Developers are penalized when their estimates don’t match their actuals.

If I understand correctly, the reason we moved to story points was to avoid this public humiliation of developers by their managers and customers.

It’s probably helped some teams, but the vast majority of teams today are no better off than before, except that now they have one extra level of indirection because of story points and then velocity.

We can certainly blame the developers and managers for not understanding story points in the first place. But will that really solve the problem teams are faced with today?

Please consider reading my blog on Story Points are Relative Complexity Estimation techniques. It will help you understand what story points are.

Assuming you know what story point estimates are, let’s consider that we have some user stories with different story points, which help us understand their relative complexity.

Then we pick up the most important stories (with different relative complexities) and try to do those stories in our next iteration/sprint.

Let’s say we end up finishing 6 user stories at the end of this iteration/sprint. We add up all the story points for each user story which was completed and we say that’s our velocity.

Next iteration/sprint, we say we can roughly pick up the same amount of total story points based on our velocity. And we plan our iterations/sprints this way. We find an oscillating velocity each iteration/sprint, which in theory should normalize over a period of time.

But do you see a fundamental error in this approach?

First we said that a 2-point story is not 2 times bigger than a 1-point story. Let’s say implementing a 1-point story might take 6 hrs, while implementing a 2-point story takes 9 hrs. Hence we assigned seemingly random numbers (the Fibonacci series) to story points in the first place. But then we go and add them all up.

If you still don’t get it, let me explain with an example.

In the nth iteration/sprint, we implemented 6 stories:

- Two 1-point stories

- Two 3-point stories

- One 5-point story

- One 8-point story

So our total velocity is ( 2*1 + 2*3 + 5 + 8 ) = 21 points. In 2 weeks we got 21 points done, hence our velocity is 21.

Next iteration/sprint, we’ll take:

- Twenty-one 1-point stories

Take a wild guess what would happen?
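To make the guess concrete, here is a minimal Python sketch. Only the 6 hrs and 9 hrs figures come from the example above; the hours I’ve assumed for 3-, 5- and 8-point stories are made up purely for illustration, and so are the names `ASSUMED_HOURS`, `velocity` and `assumed_effort`.

```python
# Hypothetical effort per story size, in hours. Only the 1-point (6 h) and
# 2-point (9 h) figures come from the text; the rest are illustrative guesses
# that keep effort growing more slowly than the point values.
ASSUMED_HOURS = {1: 6, 2: 9, 3: 11, 5: 14, 8: 18}


def velocity(stories):
    """Velocity as commonly computed: the plain sum of story points."""
    return sum(stories)


def assumed_effort(stories):
    """Total hours under the hypothetical mapping above."""
    return sum(ASSUMED_HOURS[points] for points in stories)


sprint_n = [1, 1, 3, 3, 5, 8]   # the six stories from the nth sprint
sprint_next = [1] * 21          # twenty-one 1-point stories

print(velocity(sprint_n), assumed_effort(sprint_n))        # 21 66
print(velocity(sprint_next), assumed_effort(sprint_next))  # 21 126
```

Under these assumed numbers, both sprints score a velocity of 21, yet the second one needs nearly twice the effort.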

Yeah I know, hence we don’t take just one iteration/sprint’s velocity, we take an average across many iterations/sprints.

But it’s a really big stretch to take something which was inherently not meant to be mathematical or statistical in nature and calculate velocity based on it.

If velocity anyway averages out over a period of time, then why not just count the number of stories and use them as your velocity instead of doing story-points?

Over a period of time, stories will roughly be broken down into similar-sized stories, and even if they aren’t, they will average out.

Isn’t that much simpler (with about the same amount of error) than doing all the story point business?
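As a rough sketch of what counting stories could look like, here is a small Python example. The sprint history is invented for illustration, and `forecast_story_count` and its `window` parameter are hypothetical names, not part of any tool.

```python
from statistics import mean

# Hypothetical history: number of stories finished in each past sprint.
stories_done_per_sprint = [6, 5, 7, 6, 5, 7]


def forecast_story_count(history, window=3):
    """Average number of stories completed over the last `window` sprints."""
    recent = history[-window:]
    return mean(recent)


# How many stories we might plan for next sprint, based on recent sprints.
print(forecast_story_count(stories_done_per_sprint))
```

The averaging is the same as with story-point velocity; the only thing dropped is the estimation step that produces the points.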

I used this approach for a few years and did certainly benefit from it. No doubt it’s better than effort estimation upfront. But is this the best we can do?

I know many teams who don’t do effort estimation or relative complexity estimation and have moved to a flow model instead of trying to fit things into a box.

Consider reading my blog on Estimations Considered Harmful.

Posted in Agile, Analysis, Planning | 9 Comments »

Saturday, December 4th, 2010

If we have 2 user stories (A and B), I can say A is smaller than B, hence A has fewer story points than B.

But what does “smaller” mean?

- Less Complex to Understand

- Smaller set of acceptance criteria

- Have prior experience doing something similar to A compared to B

- Have a rough (better/clearer) idea of what needs to be done to implement A compared to B

- A is less volatile and vague compared to B

- and so on…

So, A has fewer story points than B. But clearly we don’t know how long it’s going to take to implement A or B.

Hence we don’t know how much more effort and time will be required to implement B compared to A. All we know at this point is, A is smaller than B.

It is important to understand that story points are a relative complexity estimation technique, NOT an effort estimation technique (how many people, how long will it take?).

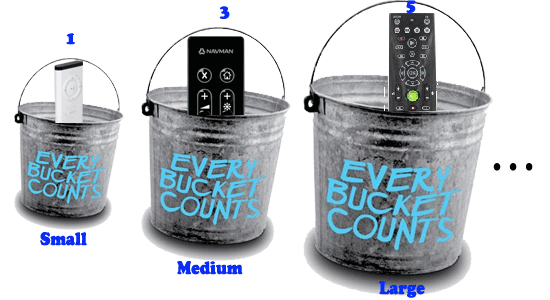

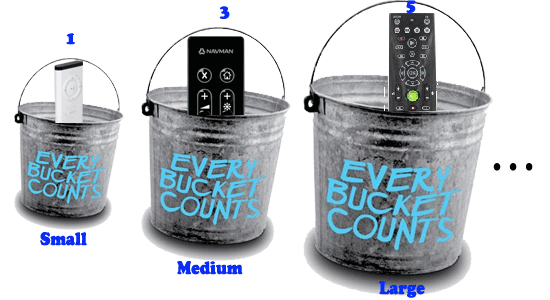

Now if we had 5 stories (A, B, C, D and E) and applied the same thinking, we could come up with different buckets into which we can put these stories.

Small, Medium, Large, XL, XXL and so on….

And then we can say all stories in the small bucket are 1 story point, all stories in the medium bucket are 3 story points, large ones are 5 story points, XL ones are 8 story points and so on…

Why do we give these random numbers instead of sequential numbers (1,2,3,4,…)?

Because with sequential numbers, we don’t want people to get confused and think that a medium story with 2 points is 2 times bigger than a small story with 1 point.

We cannot apply mathematics or statistics to story points.
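One way to picture this is to treat the buckets as ordered labels rather than numbers. The sketch below is only an illustration: the `Bucket` enum is a hypothetical name, and the value 13 for XXL is my assumption, continuing the series. Buckets can be compared and sorted, but adding them up is deliberately not supported.

```python
from enum import Enum
from functools import total_ordering


@total_ordering
class Bucket(Enum):
    """Relative-size buckets: they can be ordered, but not added."""
    SMALL = 1
    MEDIUM = 3
    LARGE = 5
    XL = 8
    XXL = 13  # assumed value, continuing the series

    def __lt__(self, other):
        if not isinstance(other, Bucket):
            return NotImplemented
        return self.value < other.value


stories = {"A": Bucket.SMALL, "B": Bucket.LARGE, "C": Bucket.MEDIUM}

# Ordering works: we can say which story is smaller.
ordered = sorted(stories, key=stories.get)
print(ordered)  # ['A', 'C', 'B']

# Arithmetic does not: a plain Enum defines no addition.
try:
    Bucket.SMALL + Bucket.MEDIUM
except TypeError:
    print("buckets cannot be added")
```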

Posted in Agile, Analysis | 2 Comments »

Saturday, July 10th, 2010

A prioritized user story backlog helps to understand what to do next, but is a difficult tool for understanding what your whole system is intended to do. A user story map arranges user stories into a useful model to help understand the functionality of the system, identify holes and omissions in your backlog, and effectively plan holistic releases that deliver value to users and business with each release.

Posted in Agile, agile india, Analysis, Coaching, Planning, post modern agile, Product Development | 2 Comments »

Sunday, July 4th, 2010

In this 60-minute tutorial presented at the Agile Mumbai 2010 conference, Tarang Baxi, Chirag Doshi and Dhaval Doshi demonstrate and discuss various business analysis anti-patterns, particularly as they apply to and impact agile projects. These anti-patterns range from BA behavior with customers to BA behavior with their own team members.

Posted in Agile, agile india, Analysis, Community, Conference | 1 Comment »

Tuesday, April 20th, 2010

At the Agile Coach Camp Goa 2010, we had a small side discussion about the difference between Use Cases and User Stories. More importantly, we discussed whether a Use Case contains many User Stories or a User Story contains many Use Cases.

According to Mike Cohn, User Stories are smaller in scope compared to Use Cases.

Even Martin Fowler has the same understanding.

IMHO it does not matter. But it’s important to note that when some people refer to User Stories, they really mean the final stage of the User Story. Hence they always say a Use Case contains many User Stories. In the real world, I see that User Stories have a life-cycle. They start out big & vague and are gradually thin-sliced into executable User Stories. Mike Cohn refers to them as Theme > Epic > Story > Task.

I’m particularly influenced by Jeff Patton’s work on this topic. Jeff highlights that User Stories really need to be at a User Goal level rather than an implementation level (at least when you start out). Else it would lead to big-upfront-design. Also, most users won’t be able to relate to really granular stories. I highly recommend reading his blog on The Mystery of Shrinking Stories.

To understand the overall approach check out his User Story Mapping Slides.

Posted in Agile, Analysis, Design, Planning | 2 Comments »

Friday, May 29th, 2009

Why do some authors call their tutorials cookbooks?

It’s a collection of guidelines (recipes) to use the software. Very similar to cookbooks or recipe books.

Cooking has been used as a metaphor/analogy for software development for many decades now. Some people have even compared Developers to Chefs, [poor] Analysts to Waiters and so on.

I find a very close resemblance between the way I cook food and the way I build software.

- Both are very much an iterative and incremental process. Big bang approaches don’t work.

- Very heavy focus on feedback and testing (tasting, smelling, feeling the texture, etc.) early on and continuously throughout. We don’t cook the whole meal and then check it. The whole cooking process is very feedback driven.

- Like software, each meal has many edible food items (features) in it. Each food item has basic ingredients (that fill your stomach) [skeleton; must-have part of the feature], ingredients that give the taste, color & meaning to the food, and ingredients that decorate the food [esthetics]. We prioritize and thin slice to get early feedback and to take baby steps.

- Like in software, fresh ingredients [new feature ideas] are more healthy and sustainable.

- Cooking is an art. You get better at it by practicing it. There are no crash courses that can make you a master cook.

- Cooking has some fundamental underlying principles that can be applied to different styles of cooking and to different cuisines. Similarly in software we have different schools of thoughts and different frameworks/technologies where those fundamental principles can be applied.

- We have lots of recipe books for cooking. 2 different cooks can take the same recipe and come up with quite different food (taste, odor, color, texture, appeal, etc.). A good cook (someone with quality experience) knows how to take a recipe and make wonderful food out of it. Others get caught up in the recipe. They miss the whole point of cooking and enjoying food.

- Efficiency can vary drastically between a good cook and a bad cook. A good cook can deliver tasty food up to 10 times faster than a lousy cook.

- Cooking needs passion and a risk-taking attitude. A passionate cook, willing to try something new, can get very creative with cooking and can deliver great results with limited resources. Someone lacking the passion will not deliver any edible food, even if they are given all the resources in the world.

- Cooking has a creative, experimental side to it. Mixing different styles of cooking can lead to wonderful results.

- Cooking is a constant learning and exploratory process. This is what adds all the fun to cooking. Not cooking the same old stuff by reading the manual.

- In cooking, there are guidelines, not rules. One with discipline who has internalized the guidelines can cook far better than one stuck with the rules and processes.

- “Too many cooks spoil the broth”. You can’t violate The Mythical Man-Month.

Also if we broaden the analogy to Restaurant business, we can see some other interesting aspects.

Posted in Agile, Analysis, Design, Programming, Testing | 2 Comments »
