Inverting the Testing Pyramid

As more and more companies are moving to the Cloud, they want their latest, greatest software features to be available to their users as quickly as they are built. However there are several issues blocking them from moving ahead.

One key issue is the massive amount of time it takes for someone to certify that the new feature is indeed working as expected and also to assure that the rest of the features will continuing to work. In spite of this long waiting cycle, we still cannot assure that our software will not have any issues. In fact, many times our assumptions about the user’s needs or behavior might itself be wrong. But this long testing cycle only helps us validate that our assumptions works as assumed.

How can we break out of this rut & get thin slices of our features in front of our users to validate our assumptions early?

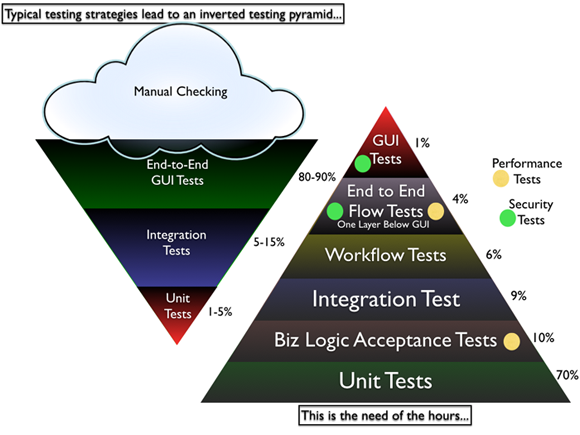

Most software organizations today suffer from what I call, the “Inverted Testing Pyramid” problem. They spend maximum time and effort manually checking software. Some invest in automation, but mostly building slow, complex, fragile end-to-end GUI test. Very little effort is spent on building a solid foundation of unit & acceptance tests.

This over-investment in end-to-end tests is a slippery slope. Once you start on this path, you end up investing even more time & effort on testing which gives you diminishing returns.

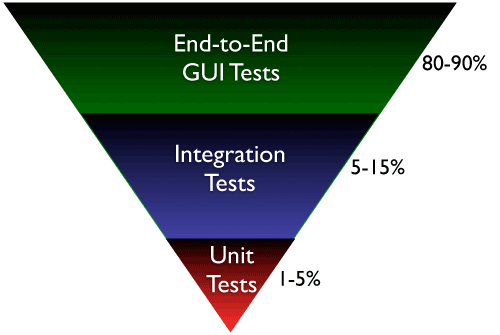

They end up with majority (80-90%) of their tests being end-to-end GUI tests. Some effort is spent on writing so-called “Integration test” (typically 5-15%.) Resulting in a shocking 1-5% of their tests being unit/micro tests.

Why is this a problem?

- The base of the pyramid is constructed from end-to-end GUI test, which are famous for their fragility and complexity. A small pixel change in the location of a UI component can result in test failure. GUI tests are also very time-sensitive, sometimes resulting in random failure (false-negative.)

- To make matters worst, most teams struggle automating their end-to-end tests early on, which results in huge amount of time spent in manual regression testing. Its quite common to find test teams struggling to catch up with development. This lag causes many other hard-development problems.

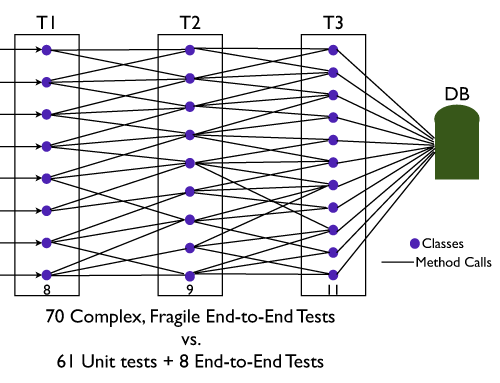

- Number of end-to-end tests required to get a good coverage is much higher and more complex than the number of unit tests + selected end-to-end tests required. (BEWARE: Don’t be Seduced by Code Coverage Numbers)

- Maintain a large number of end-to-end tests is quite a nightmare for teams. Following are some core issues with end-to-end tests:

- It requires deep domain knowledge and high technical skills to write quality end-to-end tests.

- They take a lot of time to execute.

- They are relatively resource intensive.

- Testing negative paths in end-to-end tests is very difficult (or impossible) compared to lower level tests.

- When an end-to-end test fails, we don’t get pin-pointed feedback about what went wrong.

- They are more tightly coupled with the environment and have external dependencies, hence fragile. Slight changes to the environment can cause the tests to fail. (false-negative.)

- From a refactoring point of view, they don’t give the same comfort feeling to developers as unit tests can give.

Again don’t get me wrong. I’m not suggesting end-to-end integration tests are a scam. I certainly think they have a place and time.

Imagine, an automobile company building an automobile without testing/checking the bolts, nuts all the way up to the engine, transmission, breaks, etc. And then just assembling the whole thing somehow and asking you to drive it. Would you test drive that automobile? But you will see many software companies using this approach to building software.

What I propose and help many organizations achieve is the right balance of end-to-end tests, acceptance tests and unit tests. I call this “Inverting the Testing Pyramid.” [Inspired by Jonathan Wilson’s book called Inverting The Pyramid: The History Of Football Tactics].

In a later blog post I can quickly highlight various tactics used to invert the pyramid.

Update: I recently came across Alister Scott’s blog on Introducing the software testing ice-cream cone (anti-pattern). Strongly suggest you read it.